|

Test of Time Award

|

Test Of Time

Shaoqing Ren · Kaiming He · Ross Girshick · Jian Sun

Exhibit Hall F,G,H

Abstract

[Faster R-CNN: Towards Real-Time Object Detection with Region Proposal Networks (Test of Time Award)](https://papers.neurips.cc/paper_files/paper/2015/hash/14bfa6bb14875e45bba028a21ed38046-Abstract.html) *Shaoqing Ren, Kaiming He, Ross Girshick, Jian Sun*

**Paper Abstract**: State-of-the-art object detection networks depend on region proposal algorithms to hypothesize object locations. Advances like SPPnet and Fast R-CNN have reduced the running time of these detection networks, exposing region proposal computation as a bottleneck. In this work, we introduce a Region Proposal Network (RPN) that shares full-image convolutional features with the detection network, thus enabling nearly cost-free region proposals. An RPN is a fully-convolutional network that simultaneously predicts object bounds and objectness scores at each position. RPNs are trained end-to-end to generate high-quality region proposals, which are used by Fast R-CNN for detection. With a simple alternating optimization, RPN and Fast R-CNN can be trained to share convolutional features. For the very deep VGG-16 model, our detection system has a frame rate of 5fps …

|

|

Best Paper (DB track)

|

Oral Poster

Liwei Jiang · Yuanjun Chai · Margaret Li · Mickel Liu · Raymond Fok · Nouha Dziri · Yulia Tsvetkov · Maarten Sap · Yejin Choi

Exhibit Hall C,D,E

Abstract

Large language models (LMs) often struggle to generate diverse, human-like creative content, raising concerns about the long-term homogenization of human thought through repeated exposure to similar outputs. Yet scalable methods for evaluating LM output diversity remain limited, especially beyond narrow tasks such as random number or name generation, or beyond repeated sampling from a single model. To address this gap, we introduce Infinity-Chat, a large-scale dataset of 26K diverse, real-world, open-ended user queries that admit a wide range of plausible answers with no single ground truth. We introduce the first comprehensive taxonomy for characterizing the full spectrum of open-ended prompts posed to LMs, comprising 6 top-level categories (e.g., creative content generation, brainstorm & ideation) that further break down into 17 subcategories. Using Infinity-Chat, we present a large-scale study of mode collapse in LMs, revealing a pronounced Artificial Hivemind effect in open-ended generation of LMs, characterized by (1) intra-model repetition, where …

|

|

Best Paper

|

Oral Poster

Kevin Wang · Ishaan Javali · Michał Bortkiewicz · Tomasz Trzcinski · Benjamin Eysenbach

Exhibit Hall C,D,E

Abstract

Scaling up self-supervised learning has driven breakthroughs in language and vision, yet comparable progress has remained elusive in reinforcement learning (RL). In this paper, we study building blocks for self-supervised RL that unlock substantial improvements in scalability, with network depth serving as a critical factor. Whereas most RL papers in recent years have relied on shallow architectures (around 2–5 layers), we demonstrate that increasing the depth up to 1024 layers can significantly boost performance. Our experiments are conducted in an unsupervised goal-conditioned setting, where no demonstrations or rewards are provided, so an agent must explore (from scratch) and learn how to maximize the likelihood of reaching commanded goals. Evaluated on simulated locomotion and manipulation tasks, our approach increases performance on the self-supervised contrastive RL algorithm by $2\times$–$50\times$, outperforming other goal-conditioned baselines. Increasing the model depth not only increases success rates but also qualitatively changes the behaviors …

|

|

Best Paper Runner-up

|

Oral Poster

Yizhou Liu · Ziming Liu · Jeff Gore

Exhibit Hall C,D,E

Abstract

The success of today's large language models (LLMs) depends on the observation that larger models perform better. However, the origin of this neural scaling law, that loss decreases as a power law with model size, remains unclear. We propose that representation superposition, meaning that LLMs represent more features than they have dimensions, can be a key contributor to loss and cause neural scaling. Based on Anthropic's toy model, we use weight decay to control the degree of superposition, allowing us to systematically study how loss scales with model size. When superposition is weak, the loss follows a power law only if data feature frequencies are power-law distributed. In contrast, under strong superposition, the loss generically scales inversely with model dimension across a broad class of frequency distributions, due to geometric overlaps between representation vectors. We confirmed that open-sourced LLMs operate in the strong superposition regime and have loss scaling inversely …
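The "geometric overlaps between representation vectors" mentioned in the abstract can be illustrated with a small sketch. It relies on one strong assumption that is not taken from the paper: features are represented by independent random unit vectors, whereas the toy model learns its vectors. For such random vectors in $m$ dimensions, the expected squared dot product between two of them is $1/m$, which gives some intuition for an interference term that shrinks inversely with model dimension. The function names are hypothetical.

```python
import math
import random


def random_unit_vector(m, rng):
    """Draw a direction uniformly on the unit sphere in m dimensions."""
    v = [rng.gauss(0.0, 1.0) for _ in range(m)]
    norm = math.sqrt(sum(x * x for x in v))
    return [x / norm for x in v]


def mean_squared_overlap(n_features, m, seed=0):
    """Average squared dot product over all pairs of feature vectors.

    Assumption (not from the paper): the feature vectors are random,
    not learned. With n_features > m, the vectors cannot all be
    orthogonal, so some overlap is unavoidable.
    """
    rng = random.Random(seed)
    vecs = [random_unit_vector(m, rng) for _ in range(n_features)]
    total, pairs = 0.0, 0
    for i in range(n_features):
        for j in range(i + 1, n_features):
            dot = sum(a * b for a, b in zip(vecs[i], vecs[j]))
            total += dot * dot
            pairs += 1
    return total / pairs


if __name__ == "__main__":
    for m in (8, 16, 32, 64):
        print(m, mean_squared_overlap(200, m), 1.0 / m)
```

Running the script prints the measured overlap next to $1/m$ for several dimensions; the two columns should track each other closely, though this says nothing about how the paper measures loss.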

|

|

Best Paper Runner-up

|

Oral Poster

Yang Yue · Zhiqi Chen · Rui Lu · Andrew Zhao · Zhaokai Wang · Yang Yue · Shiji Song · Gao Huang

Exhibit Hall C,D,E

Abstract

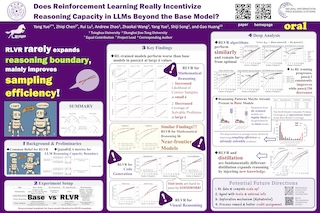

Reinforcement Learning with Verifiable Rewards (RLVR) has recently demonstrated notable success in enhancing the reasoning performance of large language models (LLMs), particularly in mathematics and programming tasks. It is widely believed that, similar to how traditional RL helps agents to explore and learn new strategies, RLVR enables LLMs to continuously self-improve, thus acquiring novel reasoning abilities that exceed the capacity of the corresponding base models. In this study, we take a critical look at *the current state of RLVR* by systematically probing the reasoning capability boundaries of RLVR-trained LLMs across diverse model families, RL algorithms, and math/coding/visual reasoning benchmarks, using pass@*k* at large *k* values as the evaluation metric. While RLVR improves sampling efficiency towards the correct path, we surprisingly find that current training does *not* elicit fundamentally new reasoning patterns. We observe that while RLVR-trained models outperform their base models at smaller values of $k$ (e.g., $k$=1), base models …
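For readers unfamiliar with the pass@*k* metric named in the abstract, here is a minimal sketch of the widely used unbiased estimator: given $n$ samples for a problem, of which $c$ are correct, it estimates the probability that at least one of $k$ randomly chosen samples is correct as $1 - \binom{n-c}{k} / \binom{n}{k}$. Whether the paper computes pass@*k* in exactly this way is an assumption; the function name and argument names are illustrative.

```python
from math import comb


def pass_at_k(n: int, c: int, k: int) -> float:
    """Estimate pass@k for one problem.

    n: total samples drawn, c: number of correct samples, k: budget.
    Returns the probability that a random size-k subset of the n
    samples contains at least one correct sample.
    """
    if not 0 <= c <= n:
        raise ValueError("c must satisfy 0 <= c <= n")
    if not 1 <= k <= n:
        raise ValueError("k must satisfy 1 <= k <= n")
    # comb(n - c, k) is 0 when fewer than k incorrect samples exist,
    # in which case every size-k subset contains a correct sample.
    return 1.0 - comb(n - c, k) / comb(n, k)
```

The estimate never decreases as $k$ grows, which is why comparisons at small and large $k$ can rank two models differently.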

|

|

Best Paper

|

Oral Poster

Tony Bonnaire · Raphaël Urfin · Giulio Biroli · Marc Mezard

Exhibit Hall C,D,E

Abstract

Diffusion models have achieved remarkable success across a wide range of generative tasks. A key challenge is understanding the mechanisms that prevent their memorization of training data and allow generalization. In this work, we investigate the role of the training dynamics in the transition from generalization to memorization. Through extensive experiments and theoretical analysis, we identify two distinct timescales: an early time $\tau_\mathrm{gen}$ at which models begin to generate high-quality samples, and a later time $\tau_\mathrm{mem}$ beyond which memorization emerges. Crucially, we find that $\tau_\mathrm{mem}$ increases linearly with the training set size $n$, while $\tau_\mathrm{gen}$ remains constant. This creates a growing window of training times with $n$ where models generalize effectively, despite showing strong memorization if training continues beyond it. It is only when $n$ becomes larger than a model-dependent threshold that overfitting disappears at infinite training times. These findings reveal a form of implicit dynamical regularization in the training …

|

|

Best Paper

|

Oral Poster

Zihan Qiu · Zekun Wang · Bo Zheng · Zeyu Huang · Kaiyue Wen · Songlin Yang · Rui Men · Le Yu · Fei Huang · Suozhi Huang · Dayiheng Liu · Jingren Zhou · Junyang Lin

Exhibit Hall C,D,E

Abstract

Gating mechanisms have been widely utilized, from early models like LSTMs and Highway Networks to recent state space models, linear attention, and also softmax attention. Yet, existing literature rarely examines the specific effects of gating. In this work, we conduct comprehensive experiments to systematically investigate gating-augmented softmax attention variants. Specifically, we perform a comprehensive comparison over 30 variants of 15B Mixture-of-Experts (MoE) models and 1.7B dense models trained on a 3.5 trillion token dataset. Our central finding is that a simple modification—applying a head-specific sigmoid gate after the Scaled Dot-Product Attention (SDPA)—consistently improves performance. This modification also enhances training stability, tolerates larger learning rates, and improves scaling properties. By comparing various gating positions and computational variants, we attribute this effectiveness to two key factors: (1) introducing non-linearity upon the low-rank mapping in the softmax attention, and (2) applying query-dependent sparse gating scores to modulate the SDPA output. Notably, we find …
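The central modification described above, a sigmoid gate applied to the output of scaled dot-product attention, can be sketched for a single head in plain Python. This is an illustration under stated assumptions, not the authors' implementation: the gate is taken to be elementwise and computed from each query token's input vector through a hypothetical per-head matrix `Wg`, and the projection names `Wq`, `Wk`, `Wv` are likewise placeholders. Masking, multiple heads, and the output projection are omitted.

```python
import math


def softmax(xs):
    m = max(xs)  # subtract the max for numerical stability
    exps = [math.exp(x - m) for x in xs]
    s = sum(exps)
    return [e / s for e in exps]


def matvec(W, x):
    """Multiply matrix W (list of rows) by vector x."""
    return [sum(w * xi for w, xi in zip(row, x)) for row in W]


def sdpa(queries, keys, values):
    """Scaled dot-product attention without masking."""
    d = len(queries[0])
    out = []
    for q in queries:
        scores = [sum(a * b for a, b in zip(q, k)) / math.sqrt(d) for k in keys]
        w = softmax(scores)
        out.append([sum(wi * v[j] for wi, v in zip(w, values))
                    for j in range(len(values[0]))])
    return out


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


def gated_attention_head(X, Wq, Wk, Wv, Wg):
    """One attention head with a sigmoid gate on the SDPA output.

    Assumption: gate_i = sigmoid(Wg @ x_i), applied elementwise to the
    attention output of token i, so the gate depends on the query token.
    """
    Q = [matvec(Wq, x) for x in X]
    K = [matvec(Wk, x) for x in X]
    V = [matvec(Wv, x) for x in X]
    Y = sdpa(Q, K, V)
    G = [[sigmoid(g) for g in matvec(Wg, x)] for x in X]
    return [[g * y for g, y in zip(g_row, y_row)] for g_row, y_row in zip(G, Y)]
```

With `Wg` set to all zeros every gate value is 0.5, so the head returns exactly half of the ungated attention output, which makes a convenient sanity check.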

|

|

Best Paper Runner-up

|

Oral Poster

Zachary Chase · Steve Hanneke · Shay Moran · Jonathan Shafer

Exhibit Hall C,D,E

Abstract

We resolve a 30-year-old open problem concerning the power of unlabeled data in online learning by tightly quantifying the gap between transductive and standard online learning. We prove that for every concept class $\mathcal{H}$ with Littlestone dimension $d$, the transductive mistake bound is at least $\Omega(\sqrt{d})$. This establishes an exponential improvement over previous lower bounds of $\Omega(\log \log d)$, $\Omega(\sqrt{\log d})$, and $\Omega(\log d)$, respectively due to Ben-David, Kushilevitz, and Mansour (1995, 1997) and Hanneke, Moran, and Shafer (2023). We also show that our bound is tight: for every $d$, there exists a class of Littlestone dimension $d$ with transductive mistake bound $O(\sqrt{d})$. Our upper bound also improves the previous best known upper bound of $(2/3) \cdot d$ from Ben-David et al. (1997). These results demonstrate a quadratic gap between transductive and standard online learning, thereby highlighting the benefit of advanced access to the unlabeled instance sequence. This stands in …

|
