Poster

Yuqi Wang · Ke Cheng · Jiawei He · Qitai Wang · Hengchen Dai · Yuntao Chen · Fei Xia · Zhao-Xiang Zhang

[ West Ballroom A-D ]

Abstract

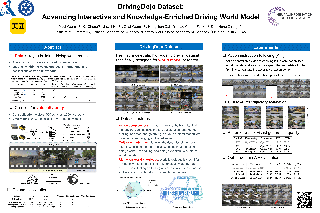

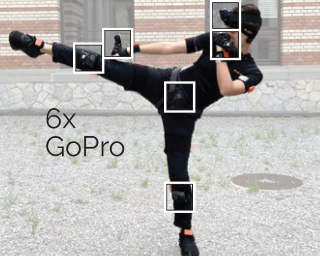

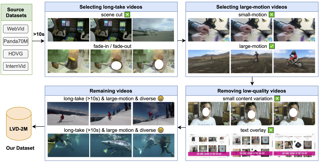

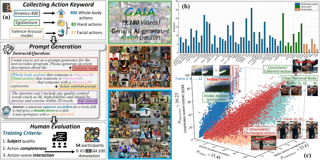

Driving world models have gained increasing attention due to their ability to model complex physical dynamics. However, their superb modeling capability is yet to be fully unleashed due to the limited video diversity in current driving datasets. We introduce DrivingDojo, the first dataset tailor-made for training interactive world models with complex driving dynamics. Our dataset features video clips with a complete set of driving maneuvers, diverse multi-agent interplay, and rich open-world driving knowledge, laying a stepping stone for future world model development. We further define an action instruction following (AIF) benchmark for world models and demonstrate the superiority of the proposed dataset for generating action-controlled future predictions.

Poster

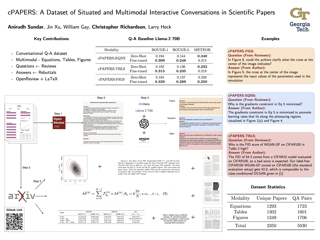

Shraman Pramanick · Rama Chellappa · Subhashini Venugopalan

[ East Exhibit Hall A-C ]

Abstract

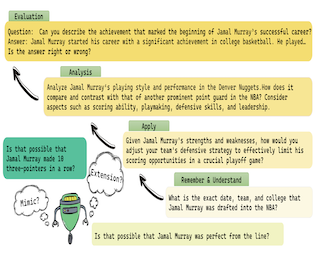

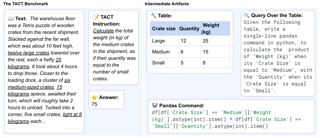

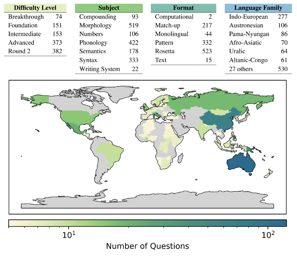

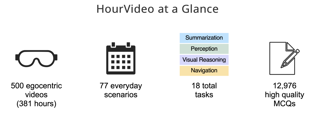

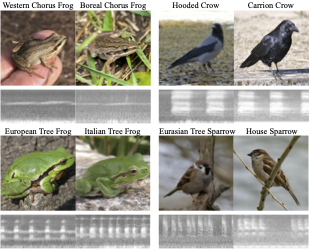

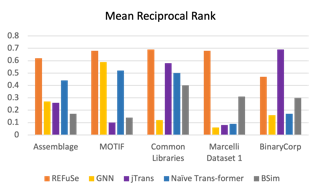

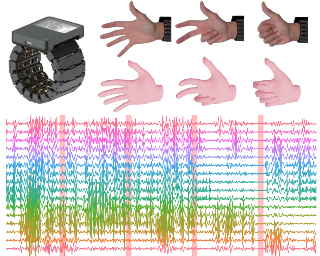

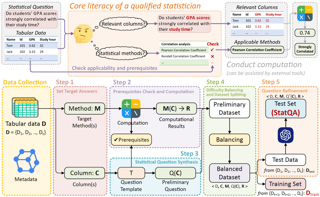

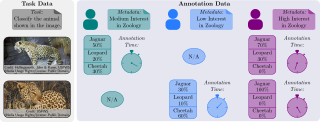

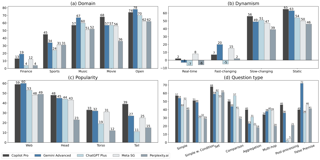

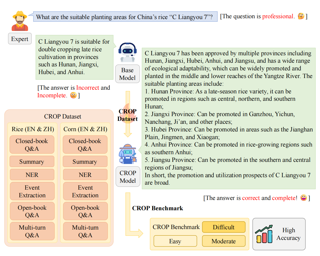

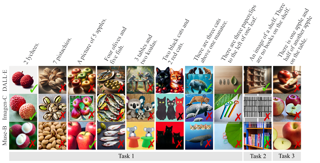

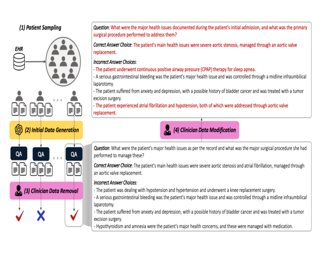

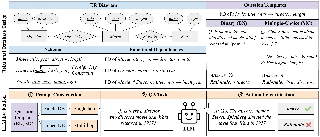

Seeking answers to questions within long scientific research articles is a crucial area of study that aids readers in quickly addressing their inquiries. However, existing question-answering (QA) datasets based on scientific papers are limited in scale and focus solely on textual content. We introduce SPIQA (Scientific Paper Image Question Answering), the first large-scale QA dataset specifically designed to interpret complex figures and tables within the context of scientific research articles across various domains of computer science. Leveraging the breadth of expertise and ability of multimodal large language models (MLLMs) to understand figures, we employ automatic and manual curation to create the dataset. We craft an information-seeking task on interleaved images and text that involves multiple images covering a wide variety of plots, charts, tables, schematic diagrams, and result visualizations. SPIQA comprises 270K questions divided into training, validation, and three different evaluation splits. Through extensive experiments with 12 prominent foundational models, we evaluate the ability of current multimodal systems to comprehend the nuanced aspects of research articles. Additionally, we propose a Chain-of-Thought (CoT) evaluation strategy with in-context retrieval that allows fine-grained, step-by-step assessment and improves model performance. We further explore the upper bounds of performance enhancement with additional textual information, highlighting …

Poster

Sahar Abdelnabi · Amr Gomaa · Sarath Sivaprasad · Lea Schönherr · Mario Fritz

[ West Ballroom A-D ]

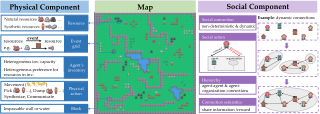

Abstract

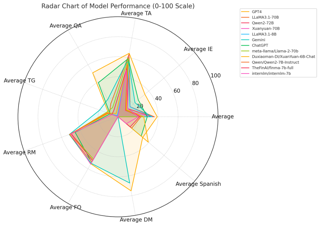

There is a growing interest in using Large Language Models (LLMs) in multi-agent systems to tackle interactive real-world tasks that require effective collaboration and assessing complex situations. Yet, we have a limited understanding of LLMs' communication and decision-making abilities in multi-agent setups. The fundamental task of negotiation spans many key features of communication, such as cooperation, competition, and manipulation potentials. Thus, we propose using scorable negotiation to evaluate LLMs. We create a testbed of complex multi-agent, multi-issue, and semantically rich negotiation games. To reach an agreement, agents must have strong arithmetic, inference, exploration, and planning capabilities while integrating them in a dynamic and multi-turn setup. We propose metrics to rigorously quantify agents' performance and alignment with the assigned role. We provide procedures to create new games and increase games' difficulty to have an evolving benchmark. Importantly, we evaluate critical safety aspects such as the interaction dynamics between agents influenced by greedy and adversarial players. Our benchmark is highly challenging; GPT-3.5 and small models mostly fail, and GPT-4 and SoTA large models (e.g., Llama-3 70b) still underperform in reaching agreement in non-cooperative and more difficult games.

Oral Poster

Hannah Rose Kirk · Alexander Whitefield · Paul Rottger · Andrew M. Bean · Katerina Margatina · Rafael Mosquera-Gomez · Juan Ciro · Max Bartolo · Adina Williams · He He · Bertie Vidgen · Scott Hale

[ West Ballroom A-D ]

Abstract

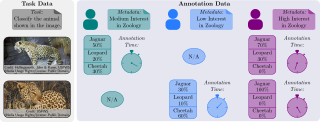

Human feedback is central to the alignment of Large Language Models (LLMs). However, open questions remain about the methods (how), domains (where), people (who) and objectives (to what end) of feedback processes. To navigate these questions, we introduce PRISM, a new dataset which maps the sociodemographics and stated preferences of 1,500 diverse participants from 75 countries, to their contextual preferences and fine-grained feedback in 8,011 live conversations with 21 LLMs. With PRISM, we contribute (i) wider geographic and demographic participation in feedback; (ii) census-representative samples for two countries (UK, US); and (iii) individualised ratings that link to detailed participant profiles, permitting personalisation and attribution of sample artefacts. We target subjective and multicultural perspectives on value-laden and controversial issues, where we expect interpersonal and cross-cultural disagreement. We use PRISM in three case studies to demonstrate the need for careful consideration of which humans provide alignment data.

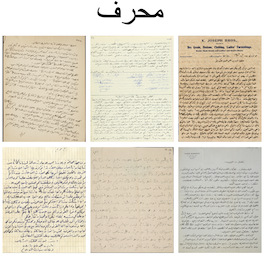

Poster

Mehreen Saeed · Adrian Chan · Anupam Mijar · Joseph Moukarzel · Gerges Habchi · Carlos Younes · Amin Elias · Chau-Wai Wong · Akram Khater

[ West Ballroom A-D ]

Abstract

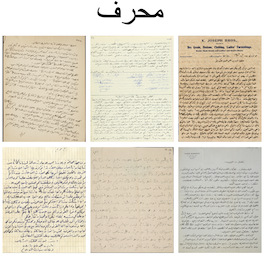

We present the Manuscripts of Handwritten Arabic (Muharaf) dataset, which is a machine learning dataset consisting of more than 1,600 historic handwritten page images transcribed by experts in archival Arabic. Each document image is accompanied by spatial polygonal coordinates of its text lines as well as basic page elements. This dataset was compiled to advance the state of the art in handwritten text recognition (HTR), not only for Arabic manuscripts but also for cursive text in general. The Muharaf dataset includes diverse handwriting styles and a wide range of document types, including personal letters, diaries, notes, poems, church records, and legal correspondences. In this paper, we describe the data acquisition pipeline, notable dataset features, and statistics. We also provide a preliminary baseline result achieved by training convolutional neural networks using this data.

Poster

Jovin Leong · Koa Di · Benjamin Cham · Shaun Heng

[ West Ballroom A-D ]

Abstract

A frequent problem in vision-based reasoning tasks such as object detection and optical character recognition (OCR) is the persistence of specular highlights. Specular highlights appear as bright spots of glare that occur due to the concentrated reflection of light; these spots manifest as image artifacts which occlude computer vision models and are challenging to reconstruct. Despite this, specular highlight removal receives relatively little attention due to the difficulty of acquiring high-quality, real-world data. We introduce a method to generate specular highlight data with near-perfect alignment and present SHDocs—a dataset of specular highlights on document images created using our method. Through our benchmark, we demonstrate that our dataset enables us to surpass the performance of state-of-the-art specular highlight removal models and downstream OCR tasks. We release our dataset, code, and methods publicly to motivate further exploration of image enhancement for practical computer vision challenges.

Poster

MaryBeth Defrance · Maarten Buyl · Tijl De Bie

[ West Ballroom A-D ]

Abstract

Numerous methods have been implemented that pursue fairness with respect to sensitive features by mitigating biases in machine learning. Yet, the problem settings that each method tackles vary significantly, including the stage of intervention, the composition of sensitive features, the fairness notion, and the distribution of the output. Even in binary classification, the greatest common denominator of problem settings is small, significantly complicating benchmarking. Hence, we introduce ABCFair, a benchmark approach which allows adapting to the desiderata of the real-world problem setting, enabling proper comparability between methods for any use case. We apply this benchmark to a range of pre-, in-, and postprocessing methods on both large-scale, traditional datasets and on a dual label (biased and unbiased) dataset to sidestep the fairness-accuracy trade-off.

Poster

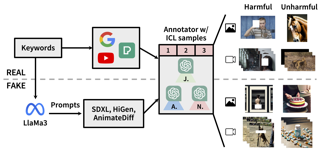

Anisha Pal · Julia Kruk · Mansi Phute · Manognya Bhattaram · Diyi Yang · Duen Horng Chau · Judy Hoffman

[ West Ballroom A-D ]

Abstract

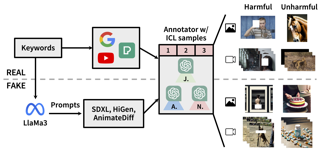

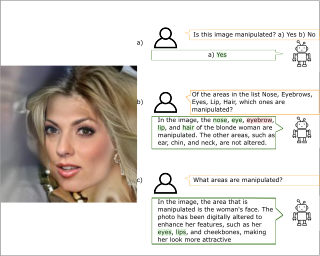

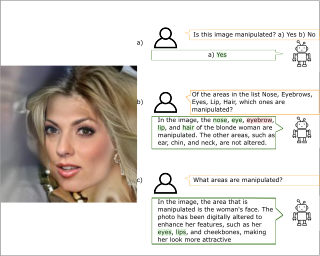

Text-to-image diffusion models have impactful applications in art, design, and entertainment, yet these technologies also pose significant risks by enabling the creation and dissemination of misinformation. Although recent advancements have produced AI-generated image detectors that claim robustness against various augmentations, their true effectiveness remains uncertain. Do these detectors reliably identify images with different levels of augmentation? Are they biased toward specific scenes or data distributions? To investigate, we introduce **Semi-Truths**, featuring $27,600$ real images, $223,400$ masks, and $1,329,155$ AI-augmented images that feature targeted and localized perturbations produced using diverse augmentation techniques, diffusion models, and data distributions. Each augmented image is accompanied by metadata for standardized and targeted evaluation of detector robustness. Our findings suggest that state-of-the-art detectors exhibit varying sensitivities to the types and degrees of perturbations, data distributions, and augmentation methods used, offering new insights into their performance and limitations. The code for the augmentation and evaluation pipeline is available at https://github.com/J-Kruk/SemiTruths.

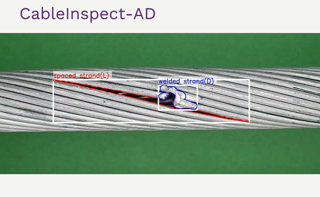

Poster

Akshatha Arodi · Margaux Luck · Jean-Luc Bedwani · Aldo Zaimi · Ge Li · Nicolas Pouliot · Julien Beaudry · Gaetan Marceau Caron

[ West Ballroom A-D ]

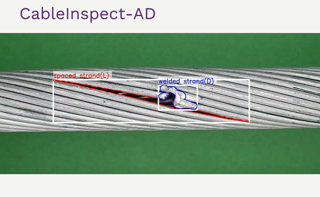

Abstract

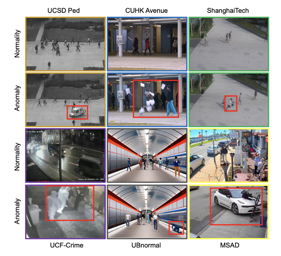

Machine learning models are increasingly being deployed in real-world contexts. However, systematic studies on their transferability to specific and critical applications are underrepresented in the research literature. An important example is visual anomaly detection (VAD) for robotic power line inspection. While existing VAD methods perform well in controlled environments, real-world scenarios present diverse and unexpected anomalies that current datasets fail to capture. To address this gap, we introduce CableInspect-AD, a high-quality, publicly available dataset created and annotated by domain experts from Hydro-Québec, a Canadian public utility. This dataset includes high-resolution images with challenging real-world anomalies, covering defects with varying severity levels. To address the challenges of collecting diverse anomalous and nominal examples for setting a detection threshold, we propose an enhancement to the celebrated PatchCore algorithm. This enhancement enables its use in scenarios with limited labeled data. We also present a comprehensive evaluation protocol based on cross-validation to assess models' performances. We evaluate our Enhanced-PatchCore for few-shot and many-shot detection, and Vision-Language Models for zero-shot detection. While promising, these models struggle to detect all anomalies, highlighting the dataset's value as a challenging benchmark for the broader research community. Project page: https://mila-iqia.github.io/cableinspect-ad/.

Poster

Houlun Chen · Xin Wang · Hong Chen · Zeyang Zhang · Wei Feng · Bin Huang · Jia Jia · Wenwu Zhu

[ West Ballroom A-D ]

Abstract

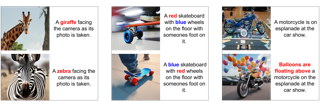

Existing Video Corpus Moment Retrieval (VCMR) is limited to coarse-grained understanding, which hinders precise video moment localization when given fine-grained queries. In this paper, we propose a more challenging fine-grained VCMR benchmark requiring methods to localize the best-matched moment from the corpus with other partially matched candidates. To improve the dataset construction efficiency and guarantee high-quality data annotations, we propose VERIFIED, an automatic **V**id**E**o-text annotation pipeline to generate captions with **R**el**I**able **FI**n**E**-grained statics and **D**ynamics. Specifically, we resort to large language models (LLMs) and large multimodal models (LMMs) with our proposed Statics and Dynamics Enhanced Captioning modules to generate diverse fine-grained captions for each video. To filter out the inaccurate annotations caused by LLM hallucination, we propose a Fine-Granularity Aware Noise Evaluator where we fine-tune a video foundation model with disturbed hard-negatives augmented contrastive and matching losses. With VERIFIED, we construct a more challenging fine-grained VCMR benchmark containing Charades-FIG, DiDeMo-FIG, and ActivityNet-FIG, which demonstrate a high level of annotation quality. We evaluate several state-of-the-art VCMR models on the proposed dataset, revealing that there is still significant scope for fine-grained video understanding in VCMR.

Poster

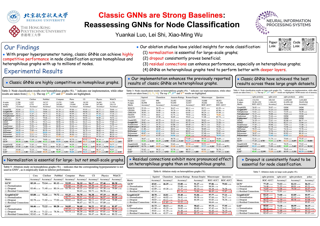

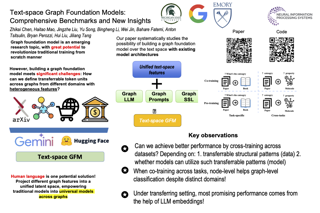

Jiasheng Zhang · Jialin Chen · Menglin Yang · Aosong Feng · Shuang Liang · Jie Shao · Rex Ying

[ West Ballroom A-D ]

Abstract

Dynamic text-attributed graphs (DyTAGs) are prevalent in various real-world scenarios, where each node and edge are associated with text descriptions, and both the graph structure and text descriptions evolve over time. Despite their broad applicability, there is a notable scarcity of benchmark datasets tailored to DyTAGs, which hinders the potential advancement in many research fields. To address this gap, we introduce Dynamic Text-attributed Graph Benchmark (DTGB), a collection of large-scale, time-evolving graphs from diverse domains, with nodes and edges enriched by dynamically changing text attributes and categories. To facilitate the use of DTGB, we design standardized evaluation procedures based on four real-world use cases: future link prediction, destination node retrieval, edge classification, and textual relation generation. These tasks require models to understand both dynamic graph structures and natural language, highlighting the unique challenges posed by DyTAGs. Moreover, we conduct extensive benchmark experiments on DTGB, evaluating 7 popular dynamic graph learning algorithms and their variants of adapting to text attributes with LLM embeddings, along with 6 powerful large language models (LLMs). Our results show the limitations of existing models in handling DyTAGs. Our analysis also demonstrates the utility of DTGB in investigating the incorporation of structural and textual dynamics. The proposed …

Poster

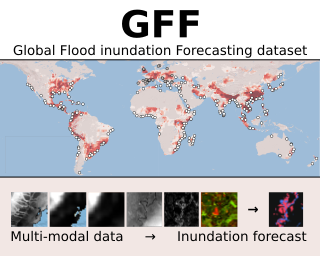

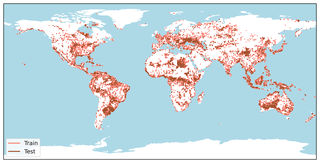

Matthew Allen · Francisco Dorr · Joseph Alejandro Gallego Mejia · Laura Martínez-Ferrer · Anna Jungbluth · Freddie Kalaitzis · Raul Ramos-Pollán

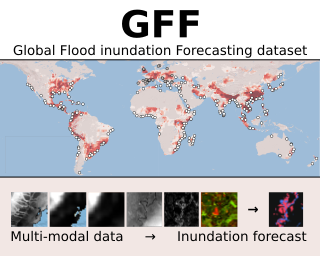

[ East Exhibit Hall A-C ]

Abstract

Satellite-based remote sensing has revolutionised the way we address global challenges in a rapidly evolving world. Huge quantities of Earth Observation (EO) data are generated by satellite sensors daily, but processing these large datasets for use in ML pipelines is technically and computationally challenging. Specifically, different types of EO data are often hosted on a variety of platforms, with differing degrees of availability for Python preprocessing tools. In addition, spatial alignment across data sources and data tiling for easier handling can present significant technical hurdles for novice users. While some preprocessed Earth observation datasets exist, their content is often limited to optical or near-optical wavelength data, which is ineffective at night or in adverse weather conditions. Synthetic Aperture Radar (SAR), an active sensing technique based on microwave length radiation, offers a viable alternative. However, the application of machine learning to SAR has been limited due to a lack of ML-ready data and pipelines, particularly for the full diversity of SAR data, including polarimetry, coherence and interferometry. In this work, we introduce M3LEO, a multi-modal, multi-label Earth observation dataset that includes polarimetric, interferometric, and coherence SAR data derived from Sentinel-1, alongside multispectral Sentinel-2 imagery and a suite of auxiliary data describing terrain properties such …

Poster

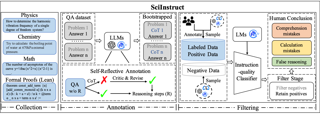

Dan Zhang · Ziniu Hu · Sining Zhoubian · Zhengxiao Du · Kaiyu Yang · Zihan Wang · Yisong Yue · Yuxiao Dong · Jie Tang

[ West Ballroom A-D ]

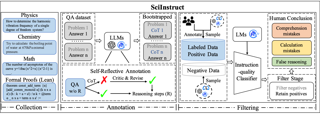

Abstract

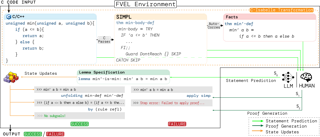

Large Language Models (LLMs) have shown promise in assisting scientific discovery. However, such applications are currently limited by LLMs' deficiencies in understanding intricate scientific concepts, deriving symbolic equations, and solving advanced numerical calculations. To bridge these gaps, we introduce SciInstruct, a suite of scientific instructions for training scientific language models capable of college-level scientific reasoning. Central to our approach is a novel self-reflective instruction annotation framework to address the data scarcity challenge in the science domain. This framework leverages existing LLMs to generate step-by-step reasoning for unlabelled scientific questions, followed by a process of self-reflective critic-and-revise. Applying this framework, we curated a diverse and high-quality dataset encompassing physics, chemistry, math, and formal proofs. We analyze the curated SciInstruct from multiple interesting perspectives (e.g., domain, scale, source, question type, answer length, etc.). To verify the effectiveness of SciInstruct, we fine-tuned different language models with SciInstruct, i.e., ChatGLM3 (6B and 32B), Llama3-8B-Instruct, and Mistral-7B: MetaMath, enhancing their scientific and mathematical reasoning capabilities, without sacrificing the language understanding capabilities of the base model. We release all codes and SciInstruct at https://github.com/THUDM/SciGLM.

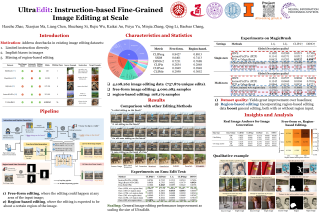

Poster

Haozhe Zhao · Xiaojian (Shawn) Ma · Liang Chen · Shuzheng Si · Rujie Wu · Kaikai An · Peiyu Yu · Minjia Zhang · Qing Li · Baobao Chang

[ West Ballroom A-D ]

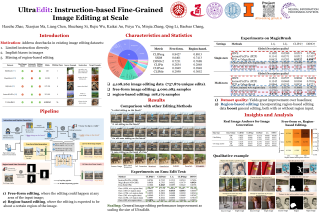

Abstract

This paper presents UltraEdit, a large-scale (~4M editing samples), automatically generated dataset for instruction-based image editing. Our key idea is to address the drawbacks in existing image editing datasets like InstructPix2Pix and MagicBrush, and provide a *systematic* approach to producing massive and high-quality image editing samples: 1) UltraEdit includes more diverse editing instructions by combining LLM creativity and in-context editing examples by human raters; 2) UltraEdit is anchored on real images (photographs or artworks), which offer more diversity and fewer biases than those purely synthesized by text-to-image models; 3) UltraEdit supports region-based editing with high-quality, automatically produced region annotations. Our experiments show that canonical diffusion-based editing baselines trained on UltraEdit set new records on the challenging MagicBrush and Emu-Edit benchmarks. Our analysis further confirms the crucial role of real image anchors and region-based editing data. The dataset, code, and models will be made public.

Poster

Qingyun Sun · Ziying Chen · Beining Yang · Cheng Ji · Xingcheng Fu · Sheng Zhou · Hao Peng · Jianxin Li · Philip S Yu

[ West Ballroom A-D ]

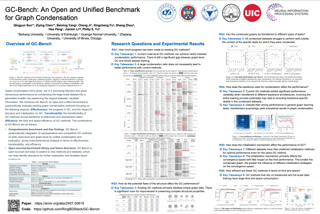

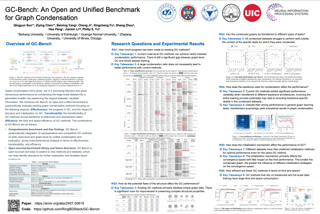

Abstract

Graph condensation (GC) has recently garnered considerable attention due to its ability to reduce large-scale graph datasets while preserving their essential properties. The core concept of GC is to create a smaller, more manageable graph that retains the characteristics of the original graph. Despite the proliferation of graph condensation methods developed in recent years, there is no comprehensive evaluation and in-depth analysis, which creates a great obstacle to understanding the progress in this field. To fill this gap, we develop a comprehensive Graph Condensation Benchmark (GC-Bench) to analyze the performance of graph condensation in different scenarios systematically. Specifically, GC-Bench systematically investigates the characteristics of graph condensation in terms of the following dimensions: effectiveness, transferability, and complexity. We comprehensively evaluate 12 state-of-the-art graph condensation algorithms in node-level and graph-level tasks and analyze their performance in 12 diverse graph datasets. Further, we have developed an easy-to-use library for training and evaluating different GC methods to facilitate reproducible research. The GC-Bench library is available at https://github.com/RingBDStack/GC-Bench.

Poster

Rhea Sukthanker · Arber Zela · Benedikt Staffler · Aaron Klein · Lennart Purucker · Jörg Franke · Frank Hutter

[ West Ballroom A-D ]

Abstract

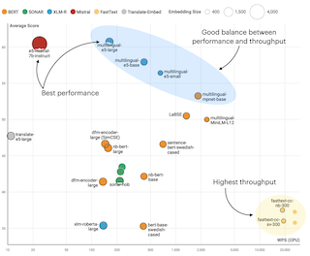

The increasing size of language models necessitates a thorough analysis across multiple dimensions to assess trade-offs among crucial hardware metrics such as latency, energy consumption, GPU memory usage, and performance. Identifying optimal model configurations under specific hardware constraints is becoming essential but remains challenging due to the computational load of exhaustive training and evaluation on multiple devices. To address this, we introduce HW-GPT-Bench, a hardware-aware benchmark that utilizes surrogate predictions to approximate various hardware metrics across 13 devices of architectures in the GPT-2 family, with architectures containing up to 1.55B parameters. Our surrogates, via calibrated predictions and reliable uncertainty estimates, faithfully model the heteroscedastic noise inherent in the energy and latency measurements. To estimate perplexity, we employ weight-sharing techniques from Neural Architecture Search (NAS), inheriting pretrained weights from the largest GPT-2 model. Finally, we demonstrate the utility of HW-GPT-Bench by simulating optimization trajectories of various multi-objective optimization algorithms in just a few seconds.
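As a rough illustration of the multi-objective trade-off described above, the sketch below filters a set of candidate configurations down to its Pareto front, assuming every objective is minimized. The function name `pareto_front` and the example numbers are hypothetical and are not taken from HW-GPT-Bench or its API.

```python
from typing import List, Sequence, Tuple


def pareto_front(points: Sequence[Tuple[float, ...]]) -> List[Tuple[float, ...]]:
    """Return the points not dominated by any other point (all objectives minimized)."""

    def dominates(a: Tuple[float, ...], b: Tuple[float, ...]) -> bool:
        # a dominates b if it is no worse in every objective and strictly better in one.
        no_worse = all(x <= y for x, y in zip(a, b))
        strictly_better = any(x < y for x, y in zip(a, b))
        return no_worse and strictly_better

    return [p for p in points if not any(dominates(q, p) for q in points)]


# Hypothetical (latency in ms, perplexity) pairs for four candidate architectures.
candidates = [(1.0, 5.0), (2.0, 3.0), (3.0, 4.0), (4.0, 1.0)]
front = pareto_front(candidates)
```

Here the candidate `(3.0, 4.0)` is dropped because `(2.0, 3.0)` is better in both objectives; the remaining three each represent a different latency/perplexity trade-off.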

Spotlight Poster

Hao Shao · Shengju Qian · Han Xiao · Guanglu Song · Zhuofan Zong · Letian Wang · Yu Liu · Hongsheng Li

[ West Ballroom A-D ]

Abstract

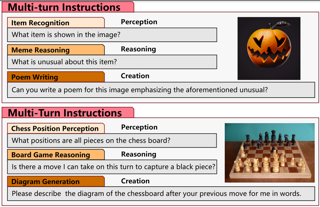

Multi-Modal Large Language Models (MLLMs) have demonstrated impressive performance in various VQA tasks. However, they often lack interpretability and struggle with complex visual inputs, especially when the resolution of the input image is high or when the region of interest that could provide key information for answering the question is small. To address these challenges, we collect and introduce the large-scale Visual CoT dataset comprising 438k question-answer pairs, annotated with intermediate bounding boxes highlighting key regions essential for answering the questions. Additionally, about 98k of these pairs are annotated with detailed reasoning steps. Importantly, we propose a multi-turn processing pipeline that dynamically focuses on visual inputs and provides interpretable thoughts. We also introduce the related benchmark to evaluate MLLMs in scenarios requiring specific local region identification. Extensive experiments demonstrate the effectiveness of our framework and shed light on better inference strategies. The Visual CoT dataset, benchmark, and pre-trained models are available on this [website](https://hao-shao.com/projects/viscot.html) to support further research in this area.

Poster

Thorben Werner · Johannes Burchert · Maximilian Stubbemann · Lars Schmidt-Thieme

[ West Ballroom A-D ]

Abstract

Active Learning (AL) deals with identifying the most informative samples for labeling to reduce data annotation costs for supervised learning tasks. AL research suffers from the fact that lifts reported in the literature generalize poorly and that only a small number of repetitions of experiments are conducted. To overcome these obstacles, we propose CDALBench, the first active learning benchmark which includes tasks in computer vision, natural language processing and tabular learning. Furthermore, by providing an efficient, greedy oracle, CDALBench can be evaluated with 50 runs for each experiment. We show that both the cross-domain character and a large number of repetitions are crucial for sophisticated evaluation of AL research. Concretely, we show that the superiority of specific methods varies over the different domains, making it important to evaluate Active Learning with a cross-domain benchmark. Additionally, we show that having a large number of runs is crucial. With only three runs, as is often done in the literature, the superiority of specific methods can strongly vary with the specific runs. This effect is so strong that, depending on the seed, even a well-established method's performance can be significantly better and significantly worse than random for the same dataset.

Poster

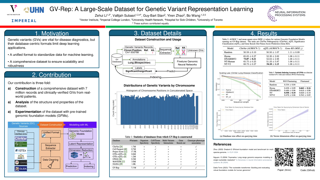

Zehui Li · Vallijah Subasri · Guy-Bart Stan · Yiren Zhao · Bo Wang

[ East Exhibit Hall A-C ]

Abstract

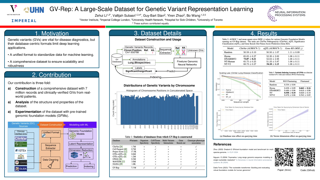

Genetic variants (GVs) are defined as differences in the DNA sequences among individuals and play a crucial role in diagnosing and treating genetic diseases. The rapid decrease in next generation sequencing cost, analogous to Moore’s Law, has led to an exponential increase in the availability of patient-level GV data. This growth poses a challenge for clinicians who must efficiently prioritize patient-specific GVs and integrate them with existing genomic databases to inform patient management. To address the interpretation of GVs, genomic foundation models (GFMs) have emerged. However, these models lack standardized performance assessments, leading to considerable variability in model evaluations. This poses the question: *How effectively do deep learning methods classify unknown GVs and align them with clinically-verified GVs?* We argue that representation learning, which transforms raw data into meaningful feature spaces, is an effective approach for addressing both indexing and classification challenges. We introduce a large-scale Genetic Variant dataset, named GV-Rep, featuring variable-length contexts and detailed annotations, designed for deep learning models to learn GV representations across various traits, diseases, tissue types, and experimental contexts. Our contributions are three-fold: (i) **Construction** of a comprehensive dataset with 7 million records, each labeled with characteristics of the corresponding variants, alongside additional data …

Poster

Kyungeun Lee · Wonjong Rhee

[ West Ballroom A-D ]

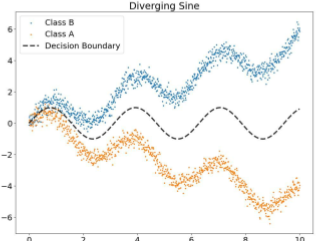

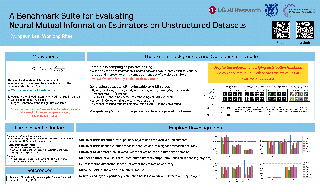

Abstract

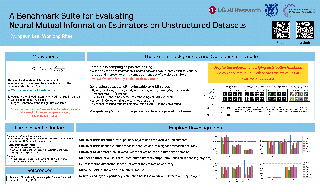

Mutual Information (MI) is a fundamental metric for quantifying dependency between two random variables. When we can access only the samples, but not the underlying distribution functions, we can evaluate MI using sample-based estimators. Assessment of such MI estimators, however, has almost always relied on analytical datasets including Gaussian multivariates. Such datasets allow analytical calculations of the true MI values, but they are limited in that they do not reflect the complexities of real-world datasets. This study introduces a comprehensive benchmark suite for evaluating neural MI estimators on unstructured datasets, specifically focusing on images and texts. By leveraging same-class sampling for positive pairing and introducing a binary symmetric channel trick, we show that we can accurately manipulate true MI values of real-world datasets. Using the benchmark suite, we investigate seven challenging scenarios, shedding light on the reliability of neural MI estimators for unstructured datasets.
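The abstract does not spell out how the binary symmetric channel (BSC) trick is applied, but the textbook relation it presumably builds on is easy to state: for a uniform binary input passed through a BSC with flip probability $p$, the mutual information is $1 - H_b(p)$ bits, where $H_b$ is the binary entropy. The following minimal sketch computes that quantity; the function names are illustrative and are not taken from the benchmark's code.

```python
import math


def binary_entropy(p: float) -> float:
    """Binary entropy H_b(p) in bits, with the convention H_b(0) = H_b(1) = 0."""
    if p in (0.0, 1.0):
        return 0.0
    return -p * math.log2(p) - (1.0 - p) * math.log2(1.0 - p)


def bsc_mutual_information(flip_prob: float) -> float:
    """I(X; Y) in bits for a uniform binary X sent through a BSC with the given flip probability."""
    if not 0.0 <= flip_prob <= 1.0:
        raise ValueError("flip_prob must lie in [0, 1]")
    return 1.0 - binary_entropy(flip_prob)
```

Sweeping `flip_prob` from 0 to 0.5 moves the true MI continuously from 1 bit down to 0 bits, which is what makes a channel of this kind a convenient dial for setting a known ground-truth MI.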

Poster

Anoop Cherian · Kuan-Chuan Peng · Suhas Lohit · Joanna Matthiesen · Kevin Smith · Josh Tenenbaum

[ West Ballroom A-D ]

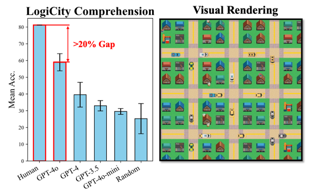

Abstract

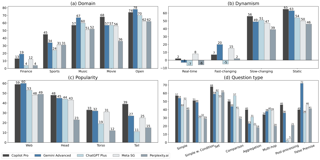

Recent years have seen significant progress in the general-purpose problem solving abilities of large vision and language models (LVLMs), such as ChatGPT, Gemini, etc.; some of these breakthroughs even seem to enable AI models to outperform human abilities in varied tasks that demand higher-order cognitive skills. Are the current large AI models indeed capable of generalized problem solving as humans do? A systematic analysis of AI capabilities for joint vision and text reasoning, however, is missing in the current scientific literature. In this paper, we make an effort towards filling this gap, by evaluating state-of-the-art LVLMs on their mathematical and algorithmic reasoning abilities using visuo-linguistic problems from children's Olympiads. Specifically, we consider problems from the Mathematical Kangaroo (MK) Olympiad, which is a popular international competition targeted at children from grades 1-12, that tests children's deeper mathematical abilities using puzzles that are appropriately gauged to their age and skills. Using the puzzles from MK, we created a dataset, dubbed SMART-840, consisting of 840 problems from years 2020-2024. With our dataset, we analyze LVLMs' power on mathematical reasoning; their responses on our puzzles offer a direct way to compare against those of children. Our results show that modern LVLMs do demonstrate …

Poster

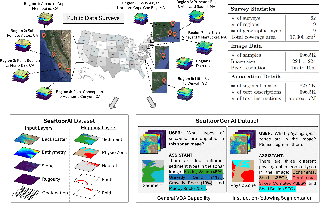

Hangyu Zhou · Chia-Hsiang Kao · Cheng Perng Phoo · Utkarsh Mall · Bharath Hariharan · Kavita Bala

[ West Ballroom A-D ]

Abstract

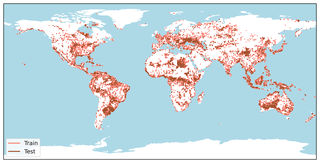

Clouds in satellite imagery pose a significant challenge for downstream applications. A major challenge in current cloud removal research is the absence of a comprehensive benchmark and a sufficiently large and diverse training dataset. To address this problem, we introduce the largest public dataset -- *AllClear* for cloud removal, featuring 23,742 globally distributed regions of interest (ROIs) with diverse land-use patterns, comprising 4 million images in total. Each ROI includes complete temporal captures from the year 2022, with (1) multi-spectral optical imagery from Sentinel-2 and Landsat 8/9, (2) synthetic aperture radar (SAR) imagery from Sentinel-1, and (3) auxiliary remote sensing products such as cloud masks and land cover maps. We validate the effectiveness of our dataset by benchmarking performance, demonstrating the scaling law - the PSNR rises from $28.47$ to $33.87$ with $30\times$ more data, and conducting ablation studies on the temporal length and the importance of individual modalities. This dataset aims to provide comprehensive coverage of the Earth's surface and promote better cloud removal results.
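For readers unfamiliar with the metric quoted above, PSNR is $10 \log_{10}(\mathrm{MAX}^2 / \mathrm{MSE})$ in decibels. The sketch below is a plain-Python version operating on flattened pixel sequences; it assumes pixel values scaled to $[0, 1]$ (`max_value=1.0`), which is an assumption of this example and not a detail stated in the abstract.

```python
import math
from typing import Sequence


def psnr(reference: Sequence[float], estimate: Sequence[float], max_value: float = 1.0) -> float:
    """Peak signal-to-noise ratio in dB between two equally sized, flattened images."""
    if len(reference) != len(estimate) or len(reference) == 0:
        raise ValueError("inputs must be non-empty and of equal length")
    # Mean squared error over all pixels.
    mse = sum((r - e) ** 2 for r, e in zip(reference, estimate)) / len(reference)
    if mse == 0.0:
        return math.inf  # identical images
    return 10.0 * math.log10(max_value ** 2 / mse)


# A uniform error of 0.1 on a [0, 1] scale gives an MSE of 0.01, i.e. about 20 dB.
example_db = psnr([0.0, 0.0], [0.1, 0.1])
```

Because the scale is logarithmic, a gain of 5.4 dB (from 28.47 to 33.87) corresponds to the mean squared error shrinking by a factor of roughly $10^{0.54} \approx 3.5$, provided the same peak value is used for both measurements.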

Poster

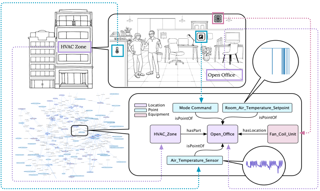

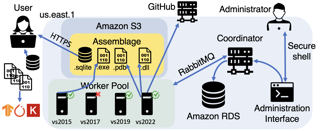

Avisek Naug · Antonio Guillen-Perez · Ricardo Luna Gutierrez · Vineet Gundecha · Cullen Bash · Sahand Ghorbanpour · Sajad Mousavi · Ashwin Ramesh Babu · Dejan Markovikj · Lekhapriya Dheeraj Kashyap · Desik Rengarajan · Soumyendu Sarkar

[ West Ballroom A-D ]

Abstract

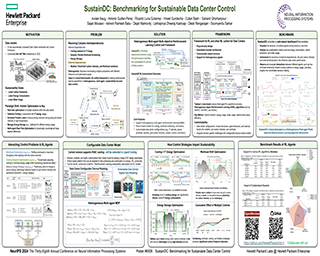

Machine learning has driven an exponential increase in computational demand, leading to massive data centers that consume significant amounts of energy and contribute to climate change. This makes sustainable data center control a priority. In this paper, we introduce SustainDC, a set of Python environments for benchmarking multi-agent reinforcement learning (MARL) algorithms for data centers (DC). SustainDC supports custom DC configurations and tasks such as workload scheduling, cooling optimization, and auxiliary battery management, with multiple agents managing these operations while accounting for the effects of each other. We evaluate various MARL algorithms on SustainDC, showing their performance across diverse DC designs, locations, weather conditions, grid carbon intensity, and workload requirements. Our results highlight significant opportunities for improvement of data center operations using MARL algorithms. Given the increasing use of DC due to AI, SustainDC provides a crucial platform for the development and benchmarking of advanced algorithms essential for achieving sustainable computing and addressing other heterogeneous real-world challenges.

Poster

Muhammad Umair Nasir · Steven James · Julian Togelius

[ West Ballroom A-D ]

Abstract

Large language models (LLMs) have recently demonstrated great success in generating and understanding natural language. While they have also shown potential beyond the domain of natural language, it remains an open question as to what extent and in which way these LLMs can plan. We investigate their planning capabilities by proposing GameTraversalBenchmark (GTB), a benchmark consisting of diverse 2D grid-based game maps. An LLM succeeds if it can traverse through given objectives, with a minimum number of steps and a minimum number of generation errors. We evaluate a number of LLMs on GTB and find that GPT-4-Turbo achieved the highest score of $44.97\%$ on `GTB_Score` (GTBS), a composite score that combines the three above criteria. Furthermore, we preliminarily test large reasoning models, namely o1, which scores $67.84\%$ on GTBS, indicating that the benchmark remains challenging for current models. Code, data, and documentation are available at https://github.com/umair-nasir14/Game-Traversal-Benchmark.

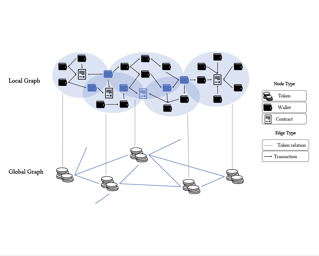

Poster

Bingqiao Luo · Zhen Zhang · Qian Wang · Bingsheng He

[ West Ballroom A-D ]

Abstract

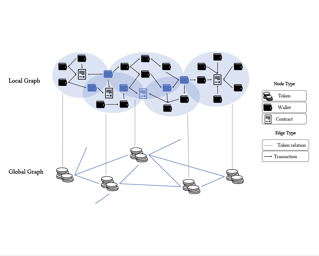

Machine learning applied to blockchain graphs offers significant opportunities for enhanced data analysis and applications. However, the potential of this field is constrained by the lack of a large-scale, cross-chain dataset that includes hierarchical graph-level data. To address this issue, we present novel datasets that provide detailed label information at the token level and integrate interactions between tokens across multiple blockchain platforms. We model transactions within each token as local graphs and the relationships between tokens as global graphs, collectively forming a "Graphs of Graphs" (GoG) approach. This innovative approach facilitates a deeper understanding of systemic structures and hierarchical interactions, which are essential for applications such as link prediction, anomaly detection, and token classification. We conduct a series of experiments demonstrating that this dataset delivers new insights and challenges for exploring GoG within the blockchain domain. Our work promotes advancements and opens new avenues for research in both the blockchain and graph communities. Source code and datasets are available at https://github.com/Xtra-Computing/Cryptocurrency-Graphs-of-graphs.

Poster

Baiqi Li · Zhiqiu Lin · Wenxuan Peng · Jean de Dieu Nyandwi · Daniel Jiang · Zixian Ma · Simran Khanuja · Ranjay Krishna · Graham Neubig · Deva Ramanan

[ East Exhibit Hall A-C ]

Abstract

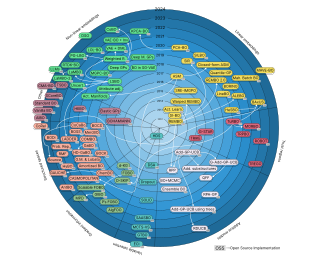

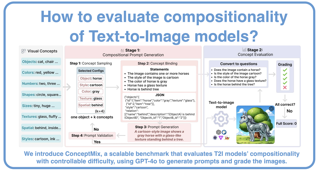

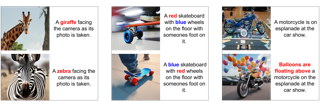

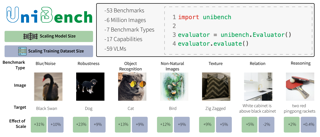

Vision-language models (VLMs) have made significant progress in recent visual-question-answering (VQA) benchmarks that evaluate complex visio-linguistic reasoning. However, are these models truly effective? In this work, we show that VLMs still struggle with natural images and questions that humans can easily answer, which we term **natural adversarial samples**. We also find it surprisingly easy to generate these VQA samples from natural image-text corpora using off-the-shelf models like CLIP and ChatGPT. We propose a semi-automated approach to collect a new benchmark, **NaturalBench**, for reliably evaluating VLMs with 10,000 human-verified VQA samples. Crucially, we adopt a **vision-centric** design by pairing each question with two images that yield different answers, preventing "blind" solutions from answering without using the images. This makes NaturalBench more challenging than previous benchmarks that can largely be solved with language priors like commonsense knowledge. We evaluate **53** state-of-the-art VLMs on NaturalBench, showing that models like BLIP-3, LLaVA-OneVision, Cambrian-1, InternLM-XC2, Llama3.2-Vision, Molmo, Qwen2-VL, and even the (closed-source) GPT-4o lag 50%-70% behind human performance (which is above 90%). We analyze why NaturalBench is hard from two angles: (1) **Compositionality:** Solving NaturalBench requires diverse visio-linguistic skills, including understanding attribute bindings, object relationships, and advanced reasoning like logic and counting. …

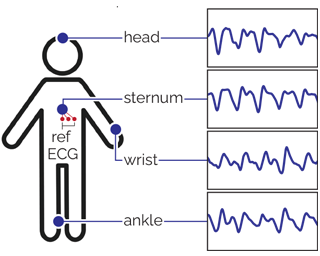

Poster

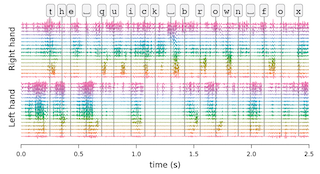

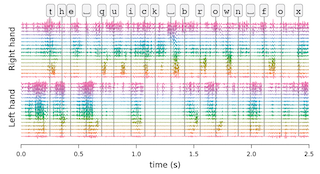

Manuel Meier · Berken Utku Demirel · Christian Holz

[ West Ballroom A-D ]

Abstract

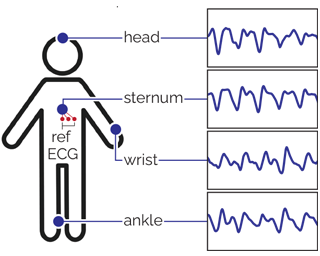

Reflective photoplethysmography (PPG) has become the default sensing technique in wearable devices to monitor cardiac activity via a person’s heart rate (HR). However, PPG-based HR estimates can be substantially impacted by factors such as the wearer’s activities, sensor placement and resulting motion artifacts, as well as environmental characteristics such as temperature and ambient light. These and other factors can significantly decrease HR prediction reliability. In this paper, we show that state-of-the-art HR estimation methods struggle when processing representative data from everyday activities in outdoor environments, likely because they rely on existing datasets that captured controlled conditions. We introduce a novel multimodal dataset and benchmark results for continuous PPG recordings during outdoor activities from 16 participants over 13.5 hours, captured from four wearable sensors, each worn at a different location on the body, totaling 216 hours. Our recordings include accelerometer, temperature, and altitude data, as well as a synchronized Lead I-based electrocardiogram for ground-truth HR references. Participants completed a round trip from Zurich to Jungfraujoch, a tall mountain in Switzerland, over the course of one day. The trip included outdoor and indoor activities such as walking, hiking, stair climbing, eating, drinking, and resting at various temperatures and altitudes (up …

Poster

Lukas Klein · Carsten Lüth · Udo Schlegel · Till Bungert · Mennatallah El-Assady · Paul Jaeger

[ West Ballroom A-D ]

Abstract

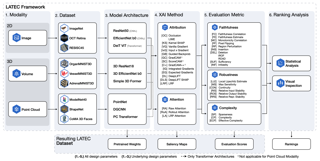

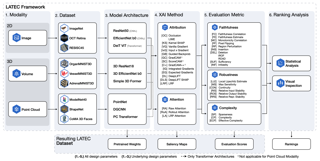

Explainable AI (XAI) is a rapidly growing domain with a myriad of proposed methods as well as metrics aiming to evaluate their efficacy. However, current studies are often of limited scope, examining only a handful of XAI methods and ignoring underlying design parameters for performance, such as the model architecture or the nature of input data. Moreover, they often rely on one or a few metrics and neglect thorough validation, increasing the risk of selection bias and ignoring discrepancies among metrics. These shortcomings leave practitioners confused about which method to choose for their problem. In response, we introduce LATEC, a large-scale benchmark that critically evaluates 17 prominent XAI methods using 20 distinct metrics. We systematically incorporate vital design parameters like varied architectures and diverse input modalities, resulting in 7,560 examined combinations. Through LATEC, we showcase the high risk of conflicting metrics leading to unreliable rankings and consequently propose a more robust evaluation scheme. Further, we comprehensively evaluate various XAI methods to assist practitioners in selecting appropriate methods aligning with their needs. Curiously, the emerging top-performing method, Expected Gradients, is not examined in any relevant related study. LATEC reinforces its role in future XAI research by publicly releasing all 326k saliency …

Poster

Mark Blacher · Christoph Staudt · Julien Klaus · Maurice Wenig · Niklas Merk · Alexander Breuer · Max Engel · Sören Laue · Joachim Giesen

[ West Ballroom A-D ]

Abstract

Modern artificial intelligence and machine learning workflows rely on efficient tensor libraries. However, tuning tensor libraries without considering the actual problems they are meant to execute can lead to a mismatch between expected and actual performance. Einsum libraries are tuned to efficiently execute tensor expressions with only a few, relatively large, dense, floating-point tensors. Practical applications of einsum, however, cover a much broader range of tensor expressions than those that can currently be executed efficiently. For this reason, we have created a benchmark dataset that encompasses this broad range of tensor expressions, allowing future implementations of einsum to build upon and be evaluated against. In addition, we also provide generators for einsum expressions and converters to einsum expressions in our repository, so that additional data can be generated as needed. The benchmark dataset, the generators and converters are released openly and are publicly available at https://benchmark.einsum.org.
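To make the notion of an einsum expression concrete, here is a minimal brute-force sketch in plain Python. It is not part of the benchmark or of any einsum library: the helper names `tiny_einsum` and `_get` are hypothetical, the expression syntax is assumed to follow the common `"inputs->output"` string format, and the evaluator enumerates every index assignment, so it is only suitable for tiny tensors.

```python
from itertools import product


def _get(tensor, index):
    """Look up one entry of a nested-list tensor by a sequence of indices."""
    for i in index:
        tensor = tensor[i]
    return tensor


def tiny_einsum(expression, *operands):
    """Evaluate an einsum expression by summing over all index assignments.

    Returns a dict that maps each output index tuple to its value.
    """
    inputs, output = expression.split("->")
    terms = inputs.split(",")
    # Infer the extent of every index letter from the operand shapes.
    sizes = {}
    for term, operand in zip(terms, operands):
        level = operand
        for letter in term:
            sizes[letter] = len(level)
            level = level[0]
    letters = sorted(sizes)
    result = {}
    for values in product(*(range(sizes[l]) for l in letters)):
        assignment = dict(zip(letters, values))
        value = 1
        for term, operand in zip(terms, operands):
            value *= _get(operand, [assignment[l] for l in term])
        # Indices absent from the output are summed out.
        key = tuple(assignment[l] for l in output)
        result[key] = result.get(key, 0) + value
    return result


A = [[1, 2], [3, 4]]
B = [[5, 6], [7, 8]]
print(tiny_einsum("ij,jk->ik", A, B))  # matrix product
print(tiny_einsum("ii->", A))          # trace
print(tiny_einsum("ij,ij,ij->", A, B, A))  # three small integer tensors
```

The last call hints at the kind of expression the abstract has in mind: several small, non-floating-point tensors contracted at once, rather than two large dense matrices.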

Poster

Nachiket Kotalwar · Alkis Gotovos · Adish Singla

[ West Ballroom A-D ]

Abstract

Generative AI and large language models hold great promise in enhancing programming education by generating individualized feedback and hints for learners. Recent works have primarily focused on improving the quality of generated feedback to achieve human tutors' quality. While quality is an important performance criterion, it is not the only criterion to optimize for real-world educational deployments. In this paper, we benchmark language models for programming feedback generation across several performance criteria, including quality, cost, time, and data privacy. The key idea is to leverage recent advances in the new paradigm of in-browser inference that allow running these models directly in the browser, thereby providing direct benefits across cost and data privacy. To boost the feedback quality of small models compatible with in-browser inference engines, we develop a fine-tuning pipeline based on GPT-4 generated synthetic data. We showcase the efficacy of fine-tuned Llama3-8B and Phi3-3.8B 4-bit quantized models using WebLLM's in-browser inference engine on three different Python programming datasets. We will release the full implementation along with a web app and datasets to facilitate further research on in-browser language models.

Spotlight Poster

Maurice Weber · Dan Fu · Quentin Anthony · Yonatan Oren · Shane Adams · Anton Alexandrov · Xiaozhong Lyu · Huu Nguyen · Xiaozhe Yao · Virginia Adams · Ben Athiwaratkun · Rahul Chalamala · Kezhen Chen · Max Ryabinin · Tri Dao · Percy Liang · Christopher Ré · Irina Rish · Ce Zhang

[ West Ballroom A-D ]

Abstract

Large language models are increasingly becoming a cornerstone technology in artificial intelligence, the sciences, and society as a whole, yet the optimal strategies for dataset composition and filtering remain largely elusive. Many of the top-performing models lack transparency in their dataset curation and model development processes, posing an obstacle to the development of fully open language models. In this paper, we identify three core data-related challenges that must be addressed to advance open-source language models. These include (1) transparency in model development, including the data curation process, (2) access to large quantities of high-quality data, and (3) availability of artifacts and metadata for dataset curation and analysis. To address these challenges, we release RedPajama-V1, an open reproduction of the LLaMA training dataset. In addition, we release RedPajama-V2, a massive web-only dataset consisting of raw, unfiltered text data together with quality signals and metadata. Together, the RedPajama datasets comprise over 100 trillion tokens spanning multiple domains and, with their quality signals, facilitate the filtering of data, aiming to inspire the development of numerous new datasets. To date, these datasets have already been used in the training of strong language models used in production, such as Snowflake Arctic, Salesforce's XGen and AI2's OLMo. …

Poster

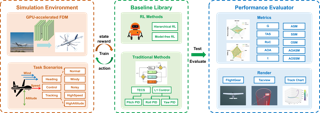

Adrian Remonda · Nicklas Hansen · Ayoub Raji · Nicola Musiu · Marko Bertogna · Eduardo Veas · Xiaolong Wang

[ West Ballroom A-D ]

Abstract

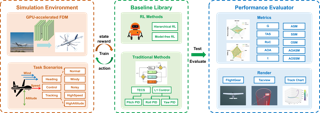

Despite the availability of international prize-money competitions, scaled vehicles, and simulation environments, research on autonomous racing and the control of sports cars operating close to the limit of handling has been limited by the high costs of vehicle acquisition and management, as well as the limited physics accuracy of open-source simulators. In this paper, we propose a racing simulation platform based on the simulator Assetto Corsa to test, validate, and benchmark autonomous driving algorithms, including reinforcement learning (RL) and classical Model Predictive Control (MPC), in realistic and challenging scenarios. Our contributions include the development of this simulation platform, several state-of-the-art algorithms tailored to the racing environment, and a comprehensive dataset collected from human drivers. Additionally, we evaluate algorithms in the offline RL setting. All the necessary code (including environment and benchmarks), working examples, and datasets are publicly released and can be found at: https://github.com/dasGringuen/assetto_corsa_gym.

Poster

Thomas Melistas · Nikos Spyrou · Nefeli Gkouti · Pedro Sanchez · Athanasios Vlontzos · Yannis Panagakis · Giorgos Papanastasiou · Sotirios Tsaftaris

[ West Ballroom A-D ]

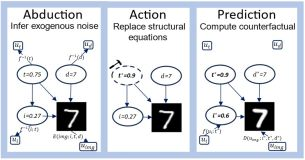

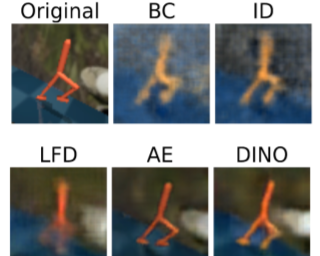

Abstract

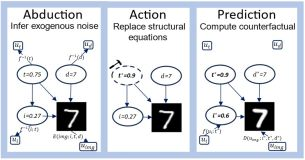

Generative AI has revolutionised visual content editing, empowering users to effortlessly modify images and videos. However, not all edits are equal. To perform realistic edits in domains such as natural images or medical imaging, modifications must respect causal relationships inherent to the data generation process. Such image editing falls into the counterfactual image generation regime. Evaluating counterfactual image generation is substantially complex: not only does it lack observable ground truths, but it also requires adherence to causal constraints. Although several counterfactual image generation methods and evaluation metrics exist, a comprehensive comparison within a unified setting is lacking. We present a comparison framework to thoroughly benchmark counterfactual image generation methods. We evaluate the performance of three conditional image generation model families developed within the Structural Causal Model (SCM) framework. We incorporate several metrics that assess diverse aspects of counterfactuals, such as composition, effectiveness, minimality of interventions, and image realism. We integrate all models that have been used for the task at hand and expand them to novel datasets and causal graphs, demonstrating the superiority of Hierarchical VAEs across most datasets and metrics. Our framework is implemented in a user-friendly Python package that can be extended to incorporate additional SCMs, causal methods, generative models, …

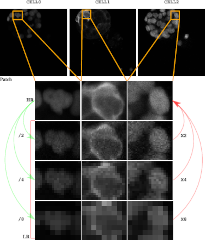

Spotlight Poster

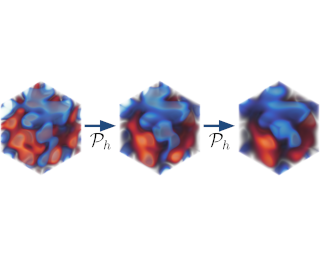

Minkyu Jeon · Rishwanth Raghu · Miro Astore · Geoffrey Woollard · J. Feathers · Alkin Kaz · Sonya Hanson · Pilar Cossio · Ellen Zhong

[ West Ballroom A-D ]

Abstract

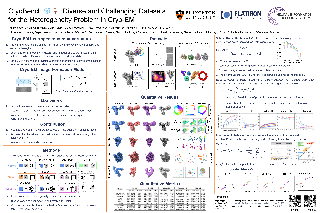

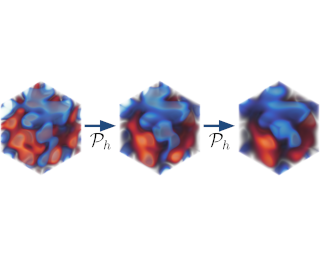

Cryo-electron microscopy (cryo-EM) is a powerful technique for determining high-resolution 3D biomolecular structures from imaging data. Its unique ability to capture structural variability has spurred the development of heterogeneous reconstruction algorithms that can infer distributions of 3D structures from noisy, unlabeled imaging data. Despite the growing number of advanced methods, progress in the field is hindered by the lack of standardized benchmarks with ground truth information and reliable validation metrics. Here, we introduce CryoBench, a suite of datasets, metrics, and benchmarks for heterogeneous reconstruction in cryo-EM. CryoBench includes five datasets representing different sources of heterogeneity and degrees of difficulty. These include conformational heterogeneity generated from designed motions of antibody complexes or sampled from a molecular dynamics simulation, as well as compositional heterogeneity from mixtures of ribosome assembly states or 100 common complexes present in cells. We then analyze state-of-the-art heterogeneous reconstruction tools, including neural and non-neural methods, assess their sensitivity to noise, and propose new metrics for quantitative evaluation. We hope that CryoBench will be a foundational resource for accelerating algorithmic development and evaluation in the cryo-EM and machine learning communities. Project page: https://cryobench.cs.princeton.edu.

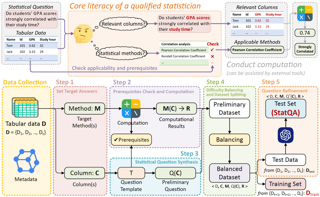

Poster

Qianqian Xie · Weiguang Han · Zhengyu Chen · Ruoyu Xiang · Xiao Zhang · Yueru He · Mengxi Xiao · Dong Li · Yongfu Dai · Duanyu Feng · Yijing Xu · Haoqiang Kang · Ziyan Kuang · Chenhan Yuan · Kailai Yang · Zheheng Luo · Tianlin Zhang · Zhiwei Liu · GUOJUN XIONG · Zhiyang Deng · Yuechen Jiang · Zhiyuan Yao · Haohang Li · Yangyang Yu · Gang Hu · Huang Jiajia · Xiaoyang Liu · Alejandro Lopez-Lira · Benyou Wang · Yanzhao Lai · Hao Wang · Min Peng · Sophia Ananiadou · Jimin Huang

[ West Ballroom A-D ]

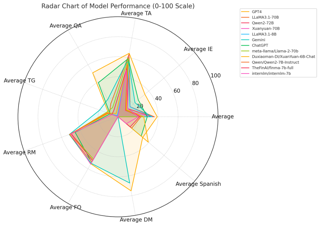

Abstract

LLMs have transformed NLP and shown promise in various fields, yet their potential in finance is underexplored due to a lack of comprehensive benchmarks, the rapid development of LLMs, and the complexity of financial tasks. In this paper, we introduce FinBen, the first extensive open-source evaluation benchmark, including 42 datasets spanning 24 financial tasks, covering eight critical aspects: information extraction (IE), textual analysis, question answering (QA), text generation, risk management, forecasting, decision-making, and bilingual (English and Spanish). FinBen offers several key innovations: a broader range of tasks and datasets, the first evaluation of stock trading, novel agent and Retrieval-Augmented Generation (RAG) evaluation, and two novel datasets for regulations and stock trading. Our evaluation of 21 representative LLMs, including GPT-4, ChatGPT, and the latest Gemini, reveals several key findings: While LLMs excel in IE and textual analysis, they struggle with advanced reasoning and complex tasks like text generation and forecasting. GPT-4 excels in IE and stock trading, while Gemini is better at text generation and forecasting. Instruction-tuned LLMs improve textual analysis but offer limited benefits for complex tasks such as QA. FinBen has been used to host the first financial LLMs shared task at the FinNLP-AgentScen workshop during IJCAI-2024, attracting 12 …

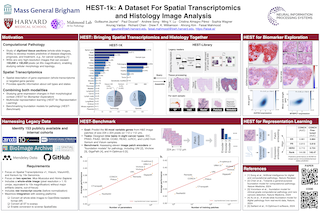

Spotlight Poster

Guillaume Jaume · Paul Doucet · Andrew Song · Ming Yang Lu · Cristina Almagro Pérez · Sophia Wagner · Anurag Vaidya · Richard Chen · Drew Williamson · Ahrong Kim · Faisal Mahmood

[ East Exhibit Hall A-C ]

Abstract

Spatial transcriptomics (ST) enables interrogating the molecular composition of tissue with ever-increasing resolution and sensitivity. However, costs, rapidly evolving technology, and lack of standards have constrained computational methods in ST to narrow tasks and small cohorts. In addition, the underlying tissue morphology, as reflected by H&E-stained whole slide images (WSIs), encodes rich information often overlooked in ST studies. Here, we introduce HEST-1k, a collection of 1,229 spatial transcriptomic profiles, each linked to a WSI and extensive metadata. HEST-1k was assembled from 153 public and internal cohorts encompassing 26 organs, two species (Homo sapiens and Mus musculus), and 367 cancer samples from 25 cancer types. HEST-1k processing enabled the identification of 2.1 million expression-morphology pairs and over 76 million nuclei. To support its development, we additionally introduce the HEST-Library, a Python package designed to perform a range of actions with HEST samples. We test HEST-1k and Library on three use cases: (1) benchmarking foundation models for pathology (HEST-Benchmark), (2) biomarker exploration, and (3) multimodal representation learning. HEST-1k, HEST-Library, and HEST-Benchmark can be freely accessed at https://github.com/mahmoodlab/hest.

Poster

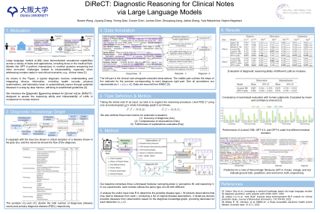

Bowen Wang · Jiuyang Chang · Yiming Qian · Guoxin Chen · Junhao Chen · Zhouqiang Jiang · Jiahao Zhang · Yuta Nakashima · Hajime Nagahara

[ East Exhibit Hall A-C ]

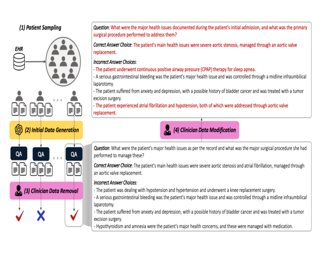

Abstract

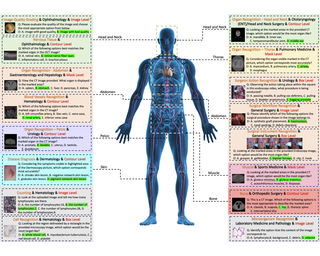

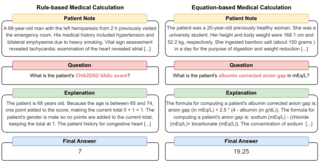

Large language models (LLMs) have recently showcased remarkable capabilities, spanning a wide range of tasks and applications, including those in the medical domain. Models like GPT-4 excel in medical question answering but may lack interpretability when handling complex tasks in real clinical settings. We thus introduce the diagnostic reasoning dataset for clinical notes (DiReCT), aiming at evaluating the reasoning ability and interpretability of LLMs compared to human doctors. It contains 511 clinical notes, each meticulously annotated by physicians, detailing the diagnostic reasoning process from observations in a clinical note to the final diagnosis. Additionally, a diagnostic knowledge graph is provided to offer essential knowledge for reasoning, which may not be covered in the training data of existing LLMs. Evaluations of leading LLMs on DiReCT bring out a significant gap between their reasoning ability and that of human doctors, highlighting the critical need for models that can reason effectively in real-world clinical scenarios.

Poster

Junchao Wu · Runzhe Zhan · Derek Wong · Shu Yang · Xinyi Yang · Yulin Yuan · Lidia Chao

[ West Ballroom A-D ]

Abstract

Detecting text generated by large language models (LLMs) is of great recent interest. With zero-shot methods like DetectGPT, detection capabilities have reached impressive levels. However, the reliability of existing detectors in real-world applications remains underexplored. In this study, we present a new benchmark, DetectRL, highlighting that even state-of-the-art (SOTA) detection techniques still underperform in this task. We collected human-written datasets from domains where LLMs are particularly prone to misuse. Using popular LLMs, we generated data that better aligns with real-world applications. Unlike previous studies, we employed heuristic rules to create adversarial LLM-generated text, simulating advanced prompt usages, human revisions like word substitutions, and writing errors. Our development of DetectRL reveals the strengths and limitations of current SOTA detectors. More importantly, we analyzed the potential impact of writing styles, model types, attack methods, text lengths, and real-world human writing factors on different types of detectors. We believe DetectRL could serve as an effective benchmark for assessing detectors in real-world scenarios, evolving with advanced attack methods, thus providing more stressful evaluation to drive the development of more efficient detectors. Data and code are publicly available at https://github.com/NLP2CT/DetectRL.

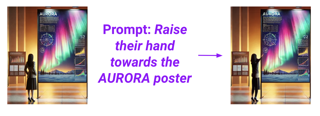

Spotlight Poster

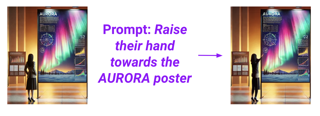

Benno Krojer · Dheeraj Vattikonda · Luis Lara · Varun Jampani · Eva Portelance · Chris Pal · Siva Reddy

[ East Exhibit Hall A-C ]

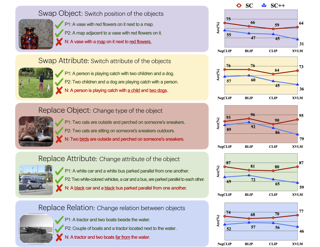

Abstract

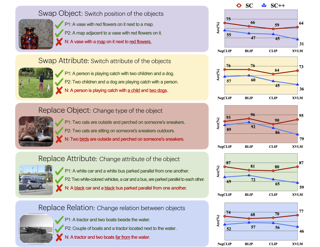

An image editing model should be able to perform diverse edits, ranging from object replacement, changing attributes or style, to performing actions or movement, which require many forms of reasoning. Current *general* instruction-guided editing models have significant shortcomings with action and reasoning-centric edits. Object, attribute or stylistic changes can be learned from visually static datasets. On the other hand, high-quality data for action and reasoning-centric edits is scarce and has to come from entirely different sources that cover e.g. physical dynamics, temporality and spatial reasoning. To this end, we meticulously curate the **A**U**RO**R**A** Dataset (**A**ction-**R**easoning-**O**bject-**A**ttribute), a collection of high-quality training data, human-annotated and curated from videos and simulation engines. We focus on a key aspect of quality training data: triplets (source image, prompt, target image) contain a single meaningful visual change described by the prompt, i.e., *truly minimal* changes between source and target images. To demonstrate the value of our dataset, we evaluate an **A**U**RO**R**A**-finetuned model on a new expert-curated benchmark (**A**U**RO**R**A-Bench**) covering 8 diverse editing tasks. Our model significantly outperforms previous editing models as judged by human raters. For automatic evaluations, we find important flaws in previous metrics and caution against their use for semantically hard editing tasks. Instead, we propose a new automatic metric that focuses …

Spotlight Poster

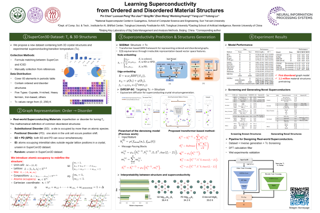

Kehan Guo · Bozhao Nan · Yujun Zhou · Taicheng Guo · Zhichun Guo · Mihir Surve · Zhenwen Liang · Nitesh Chawla · Olaf Wiest · Xiangliang Zhang

[ East Exhibit Hall A-C ]

Abstract

Large Language Models (LLMs) have shown significant problem-solving capabilities across predictive and generative tasks in chemistry. However, their proficiency in multi-step chemical reasoning remains underexplored. We introduce a new challenge: molecular structure elucidation, which involves deducing a molecule’s structure from various types of spectral data. Solving such a molecular puzzle, akin to solving crossword puzzles, poses reasoning challenges that require integrating clues from diverse sources and engaging in iterative hypothesis testing. To address this challenging problem with LLMs, we present **MolPuzzle**, a benchmark comprising 217 instances of structure elucidation, which feature over 23,000 QA samples presented in a sequential puzzle-solving process, involving three interlinked sub-tasks: molecule understanding, spectrum interpretation, and molecule construction. Our evaluation of 12 LLMs reveals that the best-performing LLM, GPT-4o, performs significantly worse than humans, with only a small portion (1.4%) of its answers exactly matching the ground truth. However, it performs nearly perfectly in the first sub-task of molecule understanding, achieving accuracy close to 100%. This discrepancy highlights the potential of developing advanced LLMs with improved chemical reasoning capabilities in the other two sub-tasks. Our MolPuzzle dataset and evaluation code are available at https://github.com/KehanGuo2/MolPuzzle.

Poster

Momin Haider · Ming Yin · Menglei Zhang · Arpit Gupta · Jing Zhu · Yu-Xiang Wang

[ East Exhibit Hall A-C ]

Abstract

Mobile devices such as smartphones, laptops, and tablets can often connect to multiple access networks (e.g., Wi-Fi, LTE, and 5G) simultaneously. Recent advancements facilitate seamless integration of these connections below the transport layer, enhancing the experience for apps that lack inherent multi-path support. This optimization hinges on dynamically determining the traffic distribution across networks for each device, a process referred to as multi-access traffic splitting. This paper introduces NetworkGym, a high-fidelity network environment simulator that facilitates generating multiple network traffic flows and multi-access traffic splitting. This simulator facilitates training and evaluating different RL-based solutions for the multi-access traffic splitting problem. Our initial explorations demonstrate that the majority of existing state-of-the-art offline RL algorithms (e.g. CQL) fail to outperform certain hand-crafted heuristic policies on average. This illustrates the urgent need to evaluate offline RL algorithms against a broader range of benchmarks, rather than relying solely on popular ones such as D4RL. We also propose an extension to the TD3+BC algorithm, named Pessimistic TD3 (PTD3), and demonstrate that it outperforms many state-of-the-art offline RL algorithms. PTD3's behavioral constraint mechanism, which relies on value-function pessimism, is theoretically motivated and relatively simple to implement. We open source our code and offline datasets at github.com/hmomin/networkgym.

Poster

Hemal Naik · Junran Yang · Dipin Das · Margaret Crofoot · Akanksha Rathore · Vivek Hari Sridhar

[ West Ballroom A-D ]

Abstract

Understanding animal behaviour is central to predicting, understanding, and mitigating impacts of natural and anthropogenic changes on animal populations and ecosystems. However, the challenges of acquiring and processing long-term, ecologically relevant data in wild settings have constrained the scope of behavioural research. The increasing availability of Unmanned Aerial Vehicles (UAVs), coupled with advances in machine learning, has opened new opportunities for wildlife monitoring using aerial tracking. However, the limited availability of datasets with wild animals in natural habitats has hindered progress in automated computer vision solutions for long-term animal tracking. Here, we introduce the first large-scale UAV dataset designed to solve multi-object tracking (MOT) and re-identification (Re-ID) problems in wild animals, specifically the mating behaviour (or lekking) of blackbuck antelopes. Collected in collaboration with biologists, the MOT dataset includes over 1.2 million annotations including 680 tracks across 12 high-resolution (5.4K) videos, each averaging 66 seconds and featuring 30 to 130 individuals. The Re-ID dataset includes 730 individuals captured with two UAVs simultaneously. The dataset is designed to drive scalable, long-term animal behaviour tracking using multiple camera sensors. By providing baseline performance with two detectors, and benchmarking several state-of-the-art tracking methods, our dataset reflects the real-world challenges of tracking wild animals in socially and ecologically relevant contexts. In making these data widely available, we hope to catalyze …

Poster

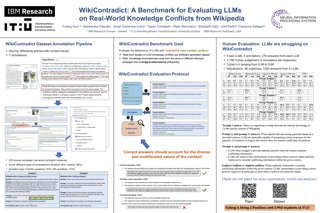

Zhaochen Su · Jun Zhang · Xiaoye Qu · Tong Zhu · Yanshu Li · Jiashuo Sun · Juntao Li · Min Zhang · Yu Cheng

[ East Exhibit Hall A-C ]

Abstract

Large language models (LLMs) have achieved impressive advancements across numerous disciplines, yet the critical issue of knowledge conflicts, a major source of hallucinations, has rarely been studied. While a few studies have explored the conflicts between the inherent knowledge of LLMs and the retrieved contextual knowledge, a comprehensive assessment of knowledge conflict in LLMs is still missing. Motivated by this research gap, we first propose ConflictBank, the largest benchmark with 7.45M claim-evidence pairs and 553k QA pairs, addressing conflicts from misinformation, temporal discrepancies, and semantic divergences. Using ConflictBank, we conduct thorough and controlled experiments for a comprehensive understanding of LLM behavior in knowledge conflicts, focusing on three key aspects: (i) conflicts encountered in retrieved knowledge, (ii) conflicts within the models' encoded knowledge, and (iii) the interplay between these conflict forms. Our investigation delves into four model families and twelve LLM instances and provides insights into conflict types, model sizes, and the impact at different stages. We believe that knowledge conflicts represent a critical bottleneck to achieving trustworthy artificial intelligence and hope our work will offer valuable guidance for future model training and development. Resources are available at https://github.com/zhaochen0110/conflictbank.

Spotlight Poster

Jifan Zhang · Lalit Jain · Yang Guo · Jiayi Chen · Kuan Zhou · Siddharth Suresh · Andrew Wagenmaker · Scott Sievert · Timothy T Rogers · Kevin Jamieson · Bob Mankoff · Robert Nowak

[ East Exhibit Hall A-C ]

Abstract

We present a novel multimodal preference dataset for creative tasks, consisting of over 250 million human votes on more than 2.2 million captions, collected through crowdsourcing rating data for The New Yorker's weekly cartoon caption contest over the past eight years. This unique dataset supports the development and evaluation of multimodal large language models and preference-based fine-tuning algorithms for humorous caption generation. We propose novel benchmarks for judging the quality of model-generated captions, utilizing both GPT4 and human judgments to establish ranking-based evaluation strategies. Our experimental results highlight the limitations of current fine-tuning methods, such as RLHF and DPO, when applied to creative tasks. Furthermore, we demonstrate that even state-of-the-art models like GPT4 and Claude currently underperform top human contestants in generating humorous captions. As we conclude this extensive data collection effort, we release the entire preference dataset to the research community, fostering further advancements in AI humor generation and evaluation.

Poster

Victor-Alexandru Pădurean · Adish Singla

[ West Ballroom A-D ]

Abstract

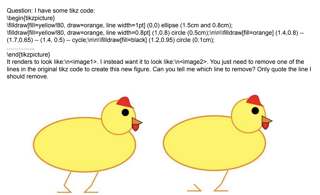

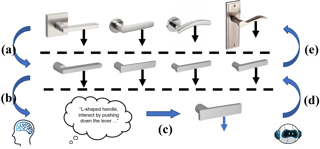

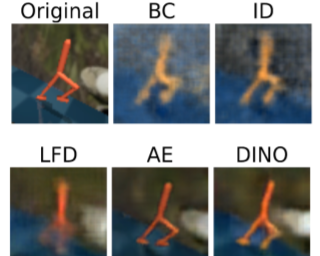

Generative models have demonstrated human-level proficiency in various benchmarks across domains like programming, natural sciences, and general knowledge. Despite these promising results on competitive benchmarks, they still struggle with seemingly simple problem-solving tasks typically carried out by elementary-level students. How do state-of-the-art models perform on standardized programming-related tests designed to assess computational thinking and problem-solving skills at schools? In this paper, we curate a novel benchmark involving computational thinking tests grounded in elementary visual programming domains. Our initial results show that state-of-the-art models like GPT-4o and Llama3 barely match the performance of an average school student. To further boost the performance of these models, we fine-tune them using a novel synthetic data generation methodology. The key idea is to develop a comprehensive dataset using symbolic methods that capture different skill levels, ranging from recognition of visual elements to multi-choice quizzes to synthesis-style tasks. We showcase how various aspects of symbolic information in synthetic data help improve fine-tuned models' performance. We will release the full implementation and datasets to facilitate further research on enhancing computational thinking in generative models.

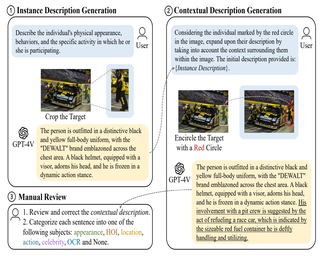

Poster

Fangyun Wei · Jinjing Zhao · Kun Yan · Hongyang Zhang · Chang Xu

[ West Ballroom A-D ]

Abstract

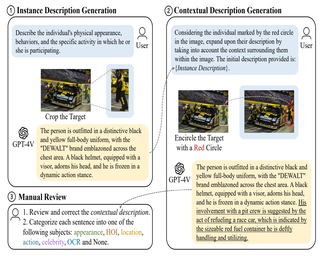

Prior research in human-centric AI has primarily addressed single-modality tasks like pedestrian detection, action recognition, and pose estimation. However, the emergence of large multimodal models (LMMs) such as GPT-4V has redirected attention towards integrating language with visual content. Referring expression comprehension (REC) represents a prime example of this multimodal approach. Current human-centric REC benchmarks, typically sourced from general datasets, fall short in the LMM era due to their limitations, such as insufficient testing samples, overly concise referring expressions, and limited vocabulary, making them inadequate for evaluating the full capabilities of modern REC models. In response, we present HC-RefLoCo (Human-Centric Referring Expression Comprehension with Long Context), a benchmark that includes 13,452 images, 24,129 instances, and 44,738 detailed annotations, encompassing a vocabulary of 18,681 words. Each annotation, meticulously reviewed for accuracy, averages 93.2 words and includes topics such as appearance, human-object interaction, location, action, celebrity, and OCR. HC-RefLoCo provides a wider range of instance scales and diverse evaluation protocols, encompassing accuracy with various IoU criteria, scale-aware evaluation, and subject-specific assessments. Our experiments, which assess 24 models, highlight HC-RefLoCo’s potential to advance human-centric AI by challenging contemporary REC models with comprehensive and varied data. Our benchmark, along with the evaluation code, are available …
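To make the phrase "accuracy with various IoU criteria" concrete, here is a minimal sketch of how REC accuracy at an IoU threshold is commonly computed. It is not taken from the HC-RefLoCo evaluation code: the box format `(x_min, y_min, x_max, y_max)`, the function names `box_iou` and `accuracy_at_iou`, and the sample boxes are all assumptions made for illustration.

```python
from typing import Sequence, Tuple

# Assumed box format: (x_min, y_min, x_max, y_max) in pixels.
Box = Tuple[float, float, float, float]


def box_iou(a: Box, b: Box) -> float:
    """Intersection-over-union of two axis-aligned boxes."""
    inter_w = max(0.0, min(a[2], b[2]) - max(a[0], b[0]))
    inter_h = max(0.0, min(a[3], b[3]) - max(a[1], b[1]))
    inter = inter_w * inter_h
    area_a = (a[2] - a[0]) * (a[3] - a[1])
    area_b = (b[2] - b[0]) * (b[3] - b[1])
    union = area_a + area_b - inter
    return inter / union if union > 0 else 0.0


def accuracy_at_iou(preds: Sequence[Box], gts: Sequence[Box], threshold: float) -> float:
    """Fraction of predictions whose IoU with the ground truth meets the threshold."""
    if len(preds) != len(gts):
        raise ValueError("preds and gts must have the same length")
    if not preds:
        return 0.0
    hits = sum(box_iou(p, g) >= threshold for p, g in zip(preds, gts))
    return hits / len(preds)


if __name__ == "__main__":
    # Hypothetical predictions and ground-truth boxes, for illustration only.
    preds = [(0, 0, 2, 2), (0, 0, 2, 2)]
    gts = [(0, 0, 2, 2), (1, 1, 3, 3)]
    for t in (0.5, 0.75, 0.9):
        print(f"Acc@{t}: {accuracy_at_iou(preds, gts, t):.2f}")
```

Sweeping the threshold in this way shows how strict the localization requirement is: a loosely placed box can count as correct at 0.5 but fail at 0.9.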

Spotlight Poster

Hugh Zhang · Jeff Da · Dean Lee · Vaughn Robinson · Catherine Wu · William Song · Tiffany Zhao · Pranav Raja · Charlotte Zhuang · Dylan Slack · Qin Lyu · Sean Hendryx · Russell Kaplan · Michele Lunati · Summer Yue

[ West Ballroom A-D ]

Abstract

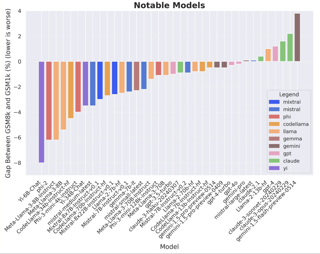

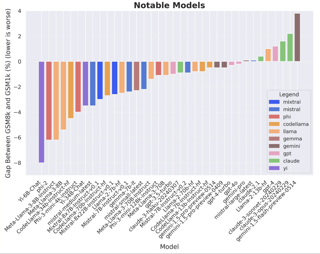

Large language models (LLMs) have achieved impressive success on many benchmarks for mathematical reasoning. However, there is growing concern that some of this performance actually reflects dataset contamination, where data closely resembling benchmark questions leaks into the training data, instead of true reasoning ability. To investigate this claim rigorously, we commission Grade School Math 1000 (GSM1k). GSM1k is designed to mirror the style and complexity of the established GSM8k benchmark, the gold standard for measuring elementary mathematical reasoning. We ensure that the two benchmarks are comparable across important metrics such as human solve rates, number of steps in solution, answer magnitude, and more. When evaluating leading open- and closed-source LLMs on GSM1k, we observe accuracy drops of up to 8%, with several families of models showing evidence of systematic overfitting across almost all model sizes. Further analysis suggests a positive relationship (Spearman's r^2=0.36) between a model's probability of generating an example from GSM8k and its performance gap between GSM8k and GSM1k, suggesting that some models may have partially memorized GSM8k. Nevertheless, many models, especially those on the frontier, show minimal signs of overfitting, and all models broadly demonstrate generalization to novel math problems guaranteed to not be in their training data.
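For readers unfamiliar with the statistic quoted above, the following is a minimal sketch of a Spearman rank correlation: Pearson correlation applied to the ranks of the two variables, with tied values receiving their average rank. The function names and the per-model numbers are hypothetical and only illustrate the calculation; they are not the authors' code or data.

```python
from math import sqrt
from typing import List, Sequence


def average_ranks(values: Sequence[float]) -> List[float]:
    """1-based ranks; tied values share the average of their positions."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    ranks = [0.0] * len(values)
    i = 0
    while i < len(order):
        j = i
        # Extend j over the run of values tied with position i.
        while j + 1 < len(order) and values[order[j + 1]] == values[order[i]]:
            j += 1
        avg = (i + j) / 2 + 1
        for k in range(i, j + 1):
            ranks[order[k]] = avg
        i = j + 1
    return ranks


def spearman_rho(x: Sequence[float], y: Sequence[float]) -> float:
    """Spearman rank correlation: Pearson correlation of the ranks."""
    if len(x) != len(y) or len(x) < 2:
        raise ValueError("x and y must have equal length of at least 2")
    rx, ry = average_ranks(x), average_ranks(y)
    mx, my = sum(rx) / len(rx), sum(ry) / len(ry)
    cov = sum((a - mx) * (b - my) for a, b in zip(rx, ry))
    var_x = sum((a - mx) ** 2 for a in rx)
    var_y = sum((b - my) ** 2 for b in ry)
    if var_x == 0 or var_y == 0:
        raise ValueError("correlation is undefined for constant input")
    return cov / sqrt(var_x * var_y)


if __name__ == "__main__":
    # Hypothetical per-model values, for illustration only.
    log_likelihood = [-2.1, -1.4, -1.9, -0.8]  # likelihood of generating benchmark items
    accuracy_gap = [0.01, 0.05, 0.02, 0.08]    # accuracy on old benchmark minus new one
    rho = spearman_rho(log_likelihood, accuracy_gap)
    print(f"rho = {rho:.2f}, rho^2 = {rho ** 2:.2f}")
```

Because the statistic depends only on rank order, it captures any monotonic relationship between the two quantities rather than a strictly linear one.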

Poster

Yifan Jiang · jiarui zhang · Kexuan Sun · Zhivar Sourati · Kian Ahrabian · Kaixin Ma · Filip Ilievski · Jay Pujara

[ East Exhibit Hall A-C ]

Abstract

While multi-modal large language models (MLLMs) have shown significant progress across popular visual reasoning benchmarks, whether they possess abstract visual reasoning abilities remains an open question. Similar to Sudoku puzzles, abstract visual reasoning (AVR) problems require finding high-level patterns (e.g., repetition constraints on numbers) that control the input shapes (e.g., digits) in a specific task configuration (e.g., matrix). However, existing AVR benchmarks only consider a limited set of patterns (addition, conjunction), input shapes (rectangle, square), and task configurations (3 × 3 matrices). They also fail to capture all abstract reasoning patterns in human cognition necessary for addressing real-world tasks, such as geometric properties and object boundary understanding in real-world navigation. To evaluate MLLMs’ AVR abilities systematically, we introduce MARVEL, a multi-dimensional AVR benchmark founded on the core knowledge system in human cognition, with 770 puzzles composed of six core knowledge patterns, geometric and abstract shapes, and five different task configurations. To inspect whether the model performance is grounded in perception or reasoning, MARVEL complements the standard AVR question with perception questions in a hierarchical evaluation framework. We conduct comprehensive experiments on MARVEL with ten representative MLLMs in zero-shot and few-shot settings. Our experiments reveal that all MLLMs show near-random …

Poster

Wenhao Wang · Yi Yang

[ East Exhibit Hall A-C ]

Abstract

The arrival of Sora marks a new era for text-to-video diffusion models, bringing significant advancements in video generation and potential applications. However, Sora, along with other text-to-video diffusion models, is highly reliant on prompts, and there is no publicly available dataset that features a study of text-to-video prompts. In this paper, we introduce VidProM, the first large-scale dataset comprising 1.67 Million unique text-to-Video Prompts from real users. Additionally, this dataset includes 6.69 million videos generated by four state-of-the-art diffusion models, alongside some related data. We initially discuss the curation of this large-scale dataset, a process that is both time-consuming and costly. Subsequently, we underscore the need for a new prompt dataset specifically designed for text-to-video generation by illustrating how VidProM differs from DiffusionDB, a large-scale prompt-gallery dataset for image generation. Our extensive and diverse dataset also opens up many exciting new research areas. For instance, we suggest exploring text-to-video prompt engineering, efficient video generation, and video copy detection for diffusion models to develop better, more efficient, and safer models. The project (including the collected dataset VidProM and related code) is publicly available at https://vidprom.github.io under the CC-BY-NC 4.0 License.

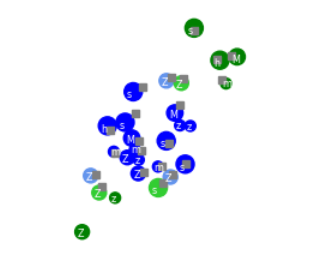

Poster

Xihuai Wang · Shao Zhang · Wenhao Zhang · Wentao Dong · Jingxiao Chen · Ying Wen · Weinan Zhang

[ West Ballroom A-D ]

Abstract

Zero-shot coordination (ZSC) is a new cooperative multi-agent reinforcement learning (MARL) challenge that aims to train an ego agent to work with diverse, unseen partners during deployment. The significant difference between the deployment-time partners' distribution and the training partners' distribution determined by the training algorithm makes ZSC a unique out-of-distribution (OOD) generalization challenge. The potential distribution gap between evaluation and deployment-time partners leads to inadequate evaluation, which is exacerbated by the lack of appropriate evaluation metrics. In this paper, we present **ZSC-Eval**, the first evaluation toolkit and benchmark for ZSC algorithms. ZSC-Eval consists of: 1) Generation of evaluation partner candidates through behavior-preferring rewards to approximate deployment-time partners' distribution; 2) Selection of evaluation partners by Best-Response Diversity (BR-Div); 3) Measurement of generalization performance with various evaluation partners via the Best-Response Proximity (BR-Prox) metric. We use ZSC-Eval to benchmark ZSC algorithms in Overcooked and Google Research Football environments and obtain novel empirical findings. We also conduct a human experiment with current ZSC algorithms to verify ZSC-Eval's consistency with human evaluation. ZSC-Eval is now available at https://github.com/sjtu-marl/ZSC-Eval.

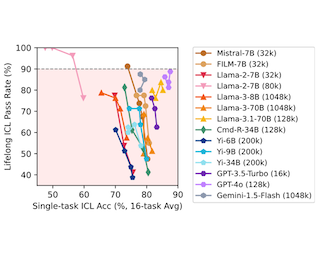

Spotlight Poster

Yury Kuratov · Aydar Bulatov · Petr Anokhin · Ivan Rodkin · Dmitry Sorokin · Artyom Sorokin · Mikhail Burtsev

[ West Ballroom A-D ]

Abstract

In recent years, the input context sizes of large language models (LLMs) have increased dramatically. However, existing evaluation methods have not kept pace, failing to comprehensively assess the efficiency of models in handling long contexts. To bridge this gap, we introduce the BABILong benchmark, designed to test language models' ability to reason across facts distributed in extremely long documents. BABILong includes a diverse set of 20 reasoning tasks, including fact chaining, simple induction, deduction, counting, and handling lists/sets. These tasks are challenging on their own, and even more demanding when the required facts are scattered across long natural text. Our evaluations show that popular LLMs effectively utilize only 10-20% of the context and their performance declines sharply with increased reasoning complexity. Among alternatives to in-context reasoning, Retrieval-Augmented Generation methods achieve a modest 60% accuracy on single-fact question answering, independent of context length. Among context extension methods, the highest performance is demonstrated by recurrent memory transformers after fine-tuning, enabling the processing of lengths up to 50 million tokens. The BABILong benchmark is extendable to any length to support the evaluation of new upcoming models with increased capabilities, and we provide splits up to 10 million token lengths.

Poster

Pedro R. A. S. Bassi · Wenxuan Li · Yucheng Tang · Fabian Isensee · Zifu Wang · Jieneng Chen · Yu-Cheng Chou · Yannick Kirchhoff · Maximilian R. Rokuss · Ziyan Huang · Jin Ye · Junjun He · Tassilo Wald · Constantin Ulrich · Michael Baumgartner · Saikat Roy · Klaus Maier-Hein · Paul Jaeger · Yiwen Ye · Yutong Xie · Jianpeng Zhang · Ziyang Chen · Yong Xia · Zhaohu Xing · Lei Zhu · Yousef Sadegheih · Afshin Bozorgpour · Pratibha Kumari · Reza Azad · Dorit Merhof · Pengcheng Shi · Ting Ma · Yuxin Du · Fan BAI · Tiejun Huang · Bo Zhao · Haonan Wang · Xiaomeng Li · Hanxue Gu · Haoyu Dong · Jichen Yang · Maciej Mazurowski · Saumya Gupta · Linshan Wu · Jia-Xin Zhuang · Hao CHEN · Holger Roth · Daguang Xu · Matthew Blaschko · Sergio Decherchi · Andrea Cavalli · Alan Yuille · Zongwei Zhou

[ West Ballroom A-D ]

Abstract

How can we test AI performance? This question seems trivial, but it isn't. Standard benchmarks often have problems such as in-distribution and small-size test sets, oversimplified metrics, unfair comparisons, and short-term outcome pressure. As a consequence, good performance on standard benchmarks does not guarantee success in real-world scenarios. To address these problems, we present Touchstone, a large-scale collaborative segmentation benchmark of 9 types of abdominal organs. This benchmark is based on 5,195 training CT scans from 76 hospitals around the world and 5,903 testing CT scans from 11 additional hospitals. This diverse test set enhances the statistical significance of benchmark results and rigorously evaluates AI algorithms across various out-of-distribution scenarios. We invited 14 inventors of 19 AI algorithms to train their algorithms, while our team, as a third party, independently evaluated these algorithms on three test sets. In addition, we evaluated pre-existing AI frameworks (which, unlike algorithms, are more flexible and can support different algorithms), including MONAI from NVIDIA, nnU-Net from DKFZ, and numerous other open-source frameworks. We are committed to expanding this benchmark to encourage more innovation of AI algorithms for the medical domain.

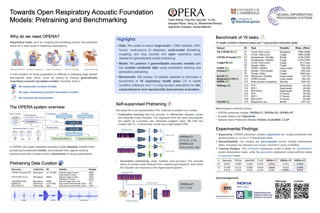

Poster

Michał Junczyk

[ West Ballroom A-D ]

Abstract

Speech datasets available in the public domain are often underutilized because of challenges in accessibility and interoperability. To address this, a system to survey, catalog, and curate existing speech datasets was developed, enabling reproducible evaluation of automatic speech recognition (ASR) systems. The system was applied to curate over 24 datasets and evaluate 25 ASR models, with a specific focus on Polish. This research represents the most extensive comparison to date of commercial and free ASR systems for the Polish language, drawing insights from 600 system-model-test set evaluations across 8 analysis scenarios. Curated datasets and benchmark results are available publicly. The evaluation tools are open-sourced to support reproducibility of the benchmark, encourage community-driven improvements, and facilitate adaptation for other languages.

Poster

Mariia Vladimirova · Federico Pavone · Eustache Diemert

[ West Ballroom A-D ]

Abstract